I. Introduction to ELK

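ELK is an acronym for three open-source projects: Elasticsearch, Logstash, and Kibana. The stack has since gained Filebeat, a lightweight log-collection agent. Filebeat uses few resources, which makes it well suited to gathering logs on each server and forwarding them to Logstash; it is also the tool Elastic recommends for this job.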

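1. Elasticsearch is an open-source distributed search engine that collects, analyzes, and stores data. Its features include distributed operation, zero configuration, automatic discovery, automatic index sharding, index replicas, a RESTful API, multiple data sources, and automatic search load balancing.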

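2. Logstash is a tool for collecting, parsing, and filtering logs, and it supports a large number of input methods. It typically runs in a client/server architecture: the client is installed on each host whose logs need collecting, and the server filters and modifies the logs received from every node before forwarding them to Elasticsearch.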

3. Kibana is also free and open source. It provides a friendly web interface for analyzing the logs held by Logstash and Elasticsearch, and helps you aggregate, analyze, and search important log data.

4. Filebeat belongs to the Beats family. Beats comprises four tools:

1) Packetbeat (collects network traffic data)

2) Topbeat (collects CPU and memory usage data at the system, process, and file-system level; superseded by Metricbeat in later releases)

3) Filebeat (collects file data)

4) Winlogbeat (collects Windows event log data)

I. System Architecture

| IP | Role | Hostname | Pods |
| --- | --- | --- | --- |
| 10.10.50.114 | Master | cmp-k8s-dev-master01 | None |
| 10.10.50.123 | Node | cmp-k8s-dev-node01 | elasticsearch-master, zookeeper, kafka, filebeat |
| 10.10.50.124 | Node | cmp-k8s-dev-node02 | elasticsearch-master, zookeeper, kafka, filebeat |
| 10.10.50.125 | Node | cmp-k8s-dev-node03 | elasticsearch-master, zookeeper, kafka, filebeat |
| 10.10.50.127 | Node | cmp-k8s-dev-node04 | filebeat |
| 10.10.50.128 | Node | cmp-k8s-dev-node05 | filebeat |
| 10.10.50.129 | Node | cmp-k8s-dev-node06 | filebeat, kibana, logstash |

Note: replace the hostnames in the configuration files with those of your own nodes.
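One way to apply this note, sketched under the assumption that the manifests are saved as local YAML files: a small shell function that swaps a tutorial hostname for your own. The function name `rewrite_hostname` and the target name `my-node01` are illustrative and not part of the original setup.

```shell
# Hypothetical helper: replace one hostname with another in a manifest read from stdin.
rewrite_hostname() {
  old="$1"
  new="$2"
  # "|" is used as the sed delimiter because hostnames cannot contain it.
  sed "s|${old}|${new}|g"
}

# Example: rewrite a single nodeAffinity value.
printf '%s\n' '- cmp-k8s-dev-node01' | rewrite_hostname cmp-k8s-dev-node01 my-node01
```

The real node names can be listed with `kubectl get nodes`; each value must match the node's `kubernetes.io/hostname` label, because that is the key the PersistentVolume node affinity selects on.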

II. Create the StorageClass and Namespace

1. Create the StorageClass

[root@cmp-k8s-dev-node01 ~]# mkdir -p /data/{master,kibana,zookeeper,kafka}

[root@cmp-k8s-dev-node02 ~]# mkdir -p /data/{master,kibana,zookeeper,kafka}

[root@cmp-k8s-dev-node03 ~]# mkdir -p /data/{master,kibana,zookeeper,kafka}

[root@cmp-k8s-dev-master01 ~]# mkdir kube-elasticsearch && cd kube-elasticsearch

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-storageclass.yaml

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: elasticsearch-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-storageclass.yaml

2. Create the Namespace

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: kube-elasticsearch
  labels:
    app: elasticsearch

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-namespace.yaml

3. Create the certificates

Reference: https://github.com/elastic/helm-charts/blob/master/elasticsearch/examples/security/Makefile#L24-L35

[root@cmp-k8s-dev-master01 kube-elasticsearch]# docker run --name elastic-certs -i -w /tmp docker.elastic.co/elasticsearch/elasticsearch:7.17.6 /bin/sh -c \
  "elasticsearch-certutil ca --out /tmp/es-ca.p12 --pass '' && \
  elasticsearch-certutil cert --name security-master --dns \
  security-master --ca /tmp/es-ca.p12 --pass '' --ca-pass '' --out /tmp/elastic-certificates.p12"

[root@cmp-k8s-dev-master01 kube-elasticsearch]# docker cp elastic-certs:/tmp/elastic-certificates.p12 ./

4. Create the Secret used for SSL authentication

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl -n kube-elasticsearch create secret generic elastic-certificates --from-file=./elastic-certificates.p12

III. Deploy the Elasticsearch Cluster

1. Create PVs for the three master nodes

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-pv-master.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-elasticsearch-pv-0
  namespace: kube-elasticsearch
  labels:
    name: local-elasticsearch-pv-0
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: elasticsearch-storage
  local:
    path: /data/master
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - cmp-k8s-dev-node01
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-elasticsearch-pv-1
  namespace: kube-elasticsearch
  labels:
    name: local-elasticsearch-pv-1
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: elasticsearch-storage
  local:
    path: /data/master
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - cmp-k8s-dev-node02
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-elasticsearch-pv-2
  namespace: kube-elasticsearch
  labels:
    name: local-elasticsearch-pv-2
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: elasticsearch-storage
  local:
    path: /data/master
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - cmp-k8s-dev-node03

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-pv-master.yaml

persistentvolume/local-elasticsearch-pv-0 created

persistentvolume/local-elasticsearch-pv-1 created

persistentvolume/local-elasticsearch-pv-2 created
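The three PersistentVolumes differ only in their index and target node, so they could also be generated rather than written by hand. The sketch below is one possible way to do that; the function names `render_pv` and `render_all_es_pvs` and the argument order are assumptions for illustration, while the field values are taken from the manifest in this step.

```shell
# Hypothetical helper: print one local PersistentVolume manifest.
# Arguments: <pv-name> <host-path> <size> <node-hostname>
# metadata.namespace is omitted because PersistentVolumes are cluster-scoped.
render_pv() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: $1
  labels:
    name: $1
spec:
  capacity:
    storage: $3
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: elasticsearch-storage
  local:
    path: $2
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - $4
EOF
}

# Emit the three Elasticsearch PVs as one multi-document YAML stream.
render_all_es_pvs() {
  i=0
  for node in cmp-k8s-dev-node01 cmp-k8s-dev-node02 cmp-k8s-dev-node03; do
    [ "$i" -gt 0 ] && echo '---'
    render_pv "local-elasticsearch-pv-$i" /data/master 10Gi "$node"
    i=$((i + 1))
  done
}
```

Piping the result into kubectl, as in `render_all_es_pvs | kubectl apply -f -`, would then create all three volumes in one step.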

2. Create the Elasticsearch StatefulSet

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-master-deploy.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: kube-elasticsearch
  name: elasticsearch-master
  labels:
    app: elasticsearch
    role: master
spec:
  serviceName: elasticsearch-master
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
      role: master
  template:
    metadata:
      labels:
        app: elasticsearch
        role: master
    spec:
      containers:
      - name: elasticsearch
        image: elasticsearch:7.17.6
        command: ["bash", "-c", "ulimit -l unlimited && sysctl -w vm.max_map_count=262144 && chown -R elasticsearch:elasticsearch /usr/share/elasticsearch/data && exec su elasticsearch docker-entrypoint.sh"]
        ports:
        - containerPort: 9200
          name: http
        - containerPort: 9300
          name: transport
        env:
        - name: discovery.seed_hosts
          value: "elasticsearch-master-0.elasticsearch-master,elasticsearch-master-1.elasticsearch-master,elasticsearch-master-2.elasticsearch-master"
        - name: cluster.initial_master_nodes
          value: "elasticsearch-master-0,elasticsearch-master-1,elasticsearch-master-2"
        - name: ES_JAVA_OPTS
          value: -Xms512m -Xmx512m
        - name: node.master
          value: "true"
        - name: node.ingest
          value: "true"
        - name: node.data
          value: "true"
        - name: cluster.name
          value: "elasticsearch"
        - name: node.name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: xpack.security.enabled
          value: "true"
        - name: xpack.security.transport.ssl.enabled
          value: "true"
        - name: xpack.monitoring.collection.enabled
          value: "true"
        - name: xpack.security.transport.ssl.verification_mode
          value: "certificate"
        - name: xpack.security.transport.ssl.keystore.path
          value: "/usr/share/elasticsearch/config/elastic-certificates.p12"
        - name: xpack.security.transport.ssl.truststore.path
          value: "/usr/share/elasticsearch/config/elastic-certificates.p12"
        volumeMounts:
        - mountPath: /usr/share/elasticsearch/data
          name: elasticsearch-pv-master
        - name: elastic-certificates
          readOnly: true
          mountPath: "/usr/share/elasticsearch/config/elastic-certificates.p12"
          subPath: elastic-certificates.p12
        - mountPath: /etc/localtime
          name: localtime
        securityContext:
          privileged: true
      volumes:
      - name: elastic-certificates
        secret:
          secretName: elastic-certificates
      - hostPath:
          path: /etc/localtime
        name: localtime
  volumeClaimTemplates:
  - metadata:
      name: elasticsearch-pv-master
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "elasticsearch-storage"
      resources:
        requests:
          storage: 10Gi

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-master-deploy.yaml

statefulset.apps/elasticsearch-master created
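The `discovery.seed_hosts` value follows the StatefulSet naming rule `<pod-name>.<service-name>`, with pods numbered from 0. As a sketch (the helper name `seed_hosts` is made up for this example), the list for any replica count could be derived like this:

```shell
# Hypothetical helper: build the discovery.seed_hosts list for a StatefulSet.
# Arguments: <statefulset-name> <service-name> <replicas>
seed_hosts() {
  i=0
  hosts=""
  while [ "$i" -lt "$3" ]; do
    # StatefulSet pods are named <statefulset-name>-<ordinal>;
    # a comma is added only when the list is already non-empty.
    hosts="${hosts}${hosts:+,}$1-$i.$2"
    i=$((i + 1))
  done
  printf '%s\n' "$hosts"
}

seed_hosts elasticsearch-master elasticsearch-master 3
```

For three replicas this prints the same comma-separated value that the manifest sets by hand.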

3. Create the Service for the ES cluster

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-svc.yaml

apiVersion: v1
kind: Service
metadata:
  namespace: kube-elasticsearch
  name: elasticsearch-master
  labels:
    app: elasticsearch
    role: master
spec:
  selector:
    app: elasticsearch
    role: master
  type: NodePort
  ports:
  - port: 9200
    nodePort: 30000
    targetPort: 9200

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-svc.yaml

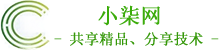

4. Set the ES cluster passwords

Option 1: generate random passwords automatically and print them to the terminal

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl -n kube-elasticsearch exec -it $(kubectl -n kube-elasticsearch get pods | grep elasticsearch-master | sed -n 1p | awk '{print $1}') -- bin/elasticsearch-setup-passwords auto -b

Option 2: set the passwords yourself

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl -n kube-elasticsearch exec -it $(kubectl -n kube-elasticsearch get pods | grep elasticsearch-master | sed -n 1p | awk '{print $1}') -- bin/elasticsearch-setup-passwords interactive

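# For ease of testing, the password is set to Aa123456

Note: choose one of the two options and keep the password for the elastic user safe; it is needed in the following steps, which assume Aa123456.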
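Once the passwords are set, a quick way to confirm that the cluster has formed is to query the `_cluster/health` API through the NodePort Service. The sketch below assumes the Service from step 3 is reachable on node 10.10.50.123 at port 30000 and that the `elastic` password is Aa123456; both helper names are illustrative.

```shell
# Hypothetical helper: pull the "status" value (green/yellow/red) out of
# a _cluster/health JSON response read from stdin.
health_status() {
  grep -o '"status" *: *"[a-z]*"' | sed 's/.*"\([a-z]*\)"$/\1/'
}

# Hypothetical helper: query the cluster through the NodePort Service.
# Arguments: <node-ip> <elastic-password>
check_es_health() {
  curl -s -u "elastic:$2" "http://$1:30000/_cluster/health" | health_status
}

# Usage (requires a running cluster):
#   check_es_health 10.10.50.123 Aa123456
```

Plain HTTP is used here because the manifest enables TLS only on the transport layer, not on the HTTP layer.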

IV. Deploy Kibana

1. Create a Secret to store the ES password

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl -n kube-elasticsearch create secret generic elasticsearch-password --from-literal password=Aa123456
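Kubernetes stores Secret values base64-encoded, which is an encoding and not encryption. The sketch below shows the round trip for the test password, which can help when checking what a Secret actually contains. It assumes a `base64` utility that accepts `-d` for decoding, as GNU coreutils does.

```shell
# Encode the test password the same way it appears in the Secret's data field.
encoded="$(printf '%s' 'Aa123456' | base64)"
echo "$encoded"

# Decode it again to confirm the round trip.
printf '%s' "$encoded" | base64 -d
echo

# Against a live cluster, the stored value could be read back with:
#   kubectl -n kube-elasticsearch get secret elasticsearch-password \
#     -o jsonpath='{.data.password}' | base64 -d
```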

2. Create the Kibana ConfigMap

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-kibana-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: kube-elasticsearch
  name: kibana-config
  labels:
    app: kibana
data:
  kibana.yml: |-
    server.host: 0.0.0.0
    elasticsearch:
      hosts: ${ELASTICSEARCH_HOSTS}
      username: ${ELASTICSEARCH_USER}
      password: ${ELASTICSEARCH_PASSWORD}

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-kibana-configmap.yaml

3. Create the Kibana Deployment

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-kibana-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: kibana
  name: kibana
  namespace: kube-elasticsearch
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
      - name: kibana
        image: kibana:7.17.6
        ports:
        - containerPort: 5601
          protocol: TCP
        env:
        - name: SERVER_PUBLICBASEURL
          value: "http://0.0.0.0:5601"
        - name: I18N.LOCALE
          value: zh-CN
        - name: ELASTICSEARCH_HOSTS
          value: "http://elasticsearch-master:9200"
        - name: ELASTICSEARCH_USER
          value: "elastic"
        - name: ELASTICSEARCH_PASSWORD
          valueFrom:
            secretKeyRef:
              name: elasticsearch-password
              key: password
        - name: xpack.encryptedSavedObjects.encryptionKey
          value: "min-32-byte-long-strong-encryption-key"
        volumeMounts:
        - name: kibana-config
          mountPath: /usr/share/kibana/config/kibana.yml
          readOnly: true
          subPath: kibana.yml
        - mountPath: /etc/localtime
          name: localtime
      volumes:
      - name: kibana-config
        configMap:
          name: kibana-config
      - hostPath:
          path: /etc/localtime
        name: localtime

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-kibana-deploy.yaml

4. Create the Kibana Service

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-kibana-svc.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    app: kibana
  name: kibana-service
  namespace: kube-elasticsearch
spec:
  ports:
  - port: 5601
    targetPort: 5601
    nodePort: 30002
  type: NodePort
  selector:
    app: kibana

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-kibana-svc.yaml

V. Deploy the ZooKeeper Cluster

1. Create the ZooKeeper PVs

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-zookeeper-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-elasticsearch-zk-0
  namespace: kube-elasticsearch
  labels:
    name: local-elasticsearch-zk-0
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: elasticsearch-storage
  local:
    path: /data/zookeeper
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - cmp-k8s-dev-node01
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-elasticsearch-zk-1
  namespace: kube-elasticsearch
  labels:
    name: local-elasticsearch-zk-1
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: elasticsearch-storage
  local:
    path: /data/zookeeper
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - cmp-k8s-dev-node02
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-elasticsearch-zk-2
  namespace: kube-elasticsearch
  labels:
    name: local-elasticsearch-zk-2
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: elasticsearch-storage
  local:
    path: /data/zookeeper
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - cmp-k8s-dev-node03

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-zookeeper-pv.yaml

persistentvolume/local-elasticsearch-zk-0 created

persistentvolume/local-elasticsearch-zk-1 created

persistentvolume/local-elasticsearch-zk-2 created

2. Create the ZooKeeper PodDisruptionBudget

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-zookeeper-pdb.yaml

apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: zookeeper-pdb
  namespace: kube-elasticsearch
spec:
  selector:
    matchLabels:
      app: zookeeper
  minAvailable: 2

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-zookeeper-pdb.yaml

poddisruptionbudget.policy/zookeeper-pdb created
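The value `minAvailable: 2` matches the ZooKeeper quorum size for a three-member ensemble: a majority of n servers is floor(n/2) + 1. A minimal sketch of that arithmetic, with an illustrative function name:

```shell
# Majority quorum for an ensemble of N servers: floor(N / 2) + 1.
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

quorum_size 3   # prints 2
quorum_size 5   # prints 3
```

With three replicas, the budget therefore allows at most one ZooKeeper pod to be evicted voluntarily at a time.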

3. Create the ZooKeeper Service

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-zookeeper-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: zookeeper
  namespace: kube-elasticsearch
  labels:
    app: zookeeper
spec:
  type: NodePort
  ports:
  - port: 2181
    nodePort: 30004
    targetPort: 2181
  selector:
    app: zookeeper

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-zookeeper-svc.yaml

service/zookeeper created

4. Create the ZooKeeper StatefulSet

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-zookeeper-deploy.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zookeeper
  namespace: kube-elasticsearch
spec:
  selector:
    matchLabels:
      app: zookeeper
  serviceName: zookeeper
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: Parallel
  template:
    metadata:
      labels:
        app: zookeeper
    spec:
      containers:
      - name: kubernetes-zookeeper
        imagePullPolicy: IfNotPresent
        image: "mirrorgooglecontainers/kubernetes-zookeeper:1.0-3.4.10"
        ports:
        - containerPort: 2181
          name: client
        - containerPort: 2888
          name: server
        - containerPort: 3888
          name: leader-election
        command:
        - sh
        - -c
        - "start-zookeeper \
          --servers=3 \
          --data_dir=/var/lib/zookeeper/data \
          --data_log_dir=/var/lib/zookeeper/data/log \
          --conf_dir=/opt/zookeeper/conf \
          --client_port=2181 \
          --election_port=3888 \
          --server_port=2888 \
          --tick_time=2000 \
          --init_limit=10 \
          --sync_limit=5 \
          --heap=512M \
          --max_client_cnxns=60 \
          --snap_retain_count=3 \
          --purge_interval=12 \
          --max_session_timeout=40000 \
          --min_session_timeout=4000 \
          --log_level=INFO"
        readinessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        volumeMounts:
        - name: zookeeper
          mountPath: /var/lib/zookeeper
      securityContext:
        runAsUser: 1000
        fsGroup: 1000
  volumeClaimTemplates:
  - metadata:
      name: zookeeper
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "elasticsearch-storage"
      resources:
        requests:
          storage: 1Gi

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-zookeeper-deploy.yaml

statefulset.apps/zookeeper created

VI. Deploy the Kafka Cluster

1. Create the Kafka PVs

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-kafka-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-elasticsearch-kafka-0
  namespace: kube-elasticsearch
  labels:
    name: local-elasticsearch-kafka-0
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: elasticsearch-storage
  local:
    path: /data/kafka
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - cmp-k8s-dev-node01
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-elasticsearch-kafka-1
  namespace: kube-elasticsearch
  labels:
    name: local-elasticsearch-kafka-1
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: elasticsearch-storage
  local:
    path: /data/kafka
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - cmp-k8s-dev-node02
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-elasticsearch-kafka-2
  namespace: kube-elasticsearch
  labels:
    name: local-elasticsearch-kafka-2
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: elasticsearch-storage
  local:
    path: /data/kafka
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - cmp-k8s-dev-node03

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-kafka-pv.yaml

persistentvolume/local-elasticsearch-kafka-0 created

persistentvolume/local-elasticsearch-kafka-1 created

persistentvolume/local-elasticsearch-kafka-2 created

2. Create the Kafka PodDisruptionBudget

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-kafka-pdb.yaml

apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: kafka-pdb
  namespace: kube-elasticsearch
spec:
  selector:
    matchLabels:
      app: kafka
  maxUnavailable: 1

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-kafka-pdb.yaml

poddisruptionbudget.policy/kafka-pdb created

3. Create the Kafka Service

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-kafka-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: kafka
  namespace: kube-elasticsearch
  labels:
    app: kafka
spec:
  type: NodePort
  ports:
  - port: 9092
    nodePort: 30001
    targetPort: 9092
  selector:
    app: kafka

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-kafka-svc.yaml

service/kafka created

4. Create the Kafka StatefulSet

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-kafka-deploy.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: kafka
  namespace: kube-elasticsearch
spec:
  selector:
    matchLabels:
      app: kafka
  serviceName: kafka
  replicas: 3
  template:
    metadata:
      labels:
        app: kafka
    spec:
      terminationGracePeriodSeconds: 300
      containers:
      - name: k8s-kafka
        imagePullPolicy: IfNotPresent
        image: fastop/kafka:2.2.0
        resources:
          requests:
            memory: "600Mi"
            cpu: 500m
        ports:
        - containerPort: 9092
          name: server
        command:
        - sh
        - -c
        - "exec kafka-server-start.sh /opt/kafka/config/server.properties --override broker.id=${HOSTNAME##*-} \
          --override listeners=PLAINTEXT://:9092 \
          --override zookeeper.connect=zookeeper.kube-elasticsearch.svc.cluster.local:2181 \
          --override log.dir=/var/lib/kafka \
          --override auto.create.topics.enable=true \
          --override auto.leader.rebalance.enable=true \
          --override background.threads=10 \
          --override compression.type=producer \
          --override delete.topic.enable=false \
          --override leader.imbalance.check.interval.seconds=300 \
          --override leader.imbalance.per.broker.percentage=10 \
          --override log.flush.interval.messages=9223372036854775807 \
          --override log.flush.offset.checkpoint.interval.ms=60000 \
          --override log.flush.scheduler.interval.ms=9223372036854775807 \
          --override log.retention.bytes=-1 \
          --override log.retention.hours=168 \
          --override log.roll.hours=168 \
          --override log.roll.jitter.hours=0 \
          --override log.segment.bytes=1073741824 \
          --override log.segment.delete.delay.ms=60000 \
          --override message.max.bytes=1000012 \
          --override min.insync.replicas=1 \
          --override num.io.threads=8 \
          --override num.network.threads=3 \
          --override num.recovery.threads.per.data.dir=1 \
          --override num.replica.fetchers=1 \
          --override offset.metadata.max.bytes=4096 \
          --override offsets.commit.required.acks=-1 \
          --override offsets.commit.timeout.ms=5000 \
          --override offsets.load.buffer.size=5242880 \
          --override offsets.retention.check.interval.ms=600000 \
          --override offsets.retention.minutes=1440 \
          --override offsets.topic.compression.codec=0 \
          --override offsets.topic.num.partitions=50 \
          --override offsets.topic.replication.factor=3 \
          --override offsets.topic.segment.bytes=104857600 \
          --override queued.max.requests=500 \
          --override quota.consumer.default=9223372036854775807 \
          --override quota.producer.default=9223372036854775807 \
          --override replica.fetch.min.bytes=1 \
          --override replica.fetch.wait.max.ms=500 \
          --override replica.high.watermark.checkpoint.interval.ms=5000 \
          --override replica.lag.time.max.ms=10000 \
          --override replica.socket.receive.buffer.bytes=65536 \
          --override replica.socket.timeout.ms=30000 \
          --override request.timeout.ms=30000 \
          --override socket.receive.buffer.bytes=102400 \
          --override socket.request.max.bytes=104857600 \
          --override socket.send.buffer.bytes=102400 \
          --override unclean.leader.election.enable=true \
          --override zookeeper.session.timeout.ms=6000 \
          --override zookeeper.set.acl=false \
          --override broker.id.generation.enable=true \
          --override connections.max.idle.ms=600000 \
          --override controlled.shutdown.enable=true \
          --override controlled.shutdown.max.retries=3 \
          --override controlled.shutdown.retry.backoff.ms=5000 \
          --override controller.socket.timeout.ms=30000 \
          --override default.replication.factor=1 \
          --override fetch.purgatory.purge.interval.requests=1000 \
          --override group.max.session.timeout.ms=300000 \
          --override group.min.session.timeout.ms=6000 \
          --override inter.broker.protocol.version=2.2.0 \
          --override log.cleaner.backoff.ms=15000 \
          --override log.cleaner.dedupe.buffer.size=134217728 \
          --override log.cleaner.delete.retention.ms=86400000 \
          --override log.cleaner.enable=true \
          --override log.cleaner.io.buffer.load.factor=0.9 \
          --override log.cleaner.io.buffer.size=524288 \
          --override log.cleaner.io.max.bytes.per.second=1.7976931348623157E308 \
          --override log.cleaner.min.cleanable.ratio=0.5 \
          --override log.cleaner.min.compaction.lag.ms=0 \
          --override log.cleaner.threads=1 \
          --override log.cleanup.policy=delete \
          --override log.index.interval.bytes=4096 \
          --override log.index.size.max.bytes=10485760 \
          --override log.message.timestamp.difference.max.ms=9223372036854775807 \
          --override log.message.timestamp.type=CreateTime \
          --override log.preallocate=false \
          --override log.retention.check.interval.ms=300000 \
          --override max.connections.per.ip=2147483647 \
          --override num.partitions=4 \
          --override producer.purgatory.purge.interval.requests=1000 \
          --override replica.fetch.backoff.ms=1000 \
          --override replica.fetch.max.bytes=1048576 \
          --override replica.fetch.response.max.bytes=10485760 \
          --override reserved.broker.max.id=1000 "
        env:
        - name: KAFKA_HEAP_OPTS
          value: "-Xmx512M -Xms512M"
        - name: KAFKA_OPTS
          value: "-Dlogging.level=INFO"
        volumeMounts:
        - name: kafka
          mountPath: /var/lib/kafka
        readinessProbe:
          tcpSocket:
            port: 9092
          timeoutSeconds: 1
          initialDelaySeconds: 5
      securityContext:
        runAsUser: 1000
        fsGroup: 1000
  volumeClaimTemplates:
  - metadata:
      name: kafka
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "elasticsearch-storage"
      resources:
        requests:
          storage: 1Gi

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-kafka-deploy.yaml

statefulset.apps/kafka configured
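The start command derives each broker's ID from its pod name with the shell expansion `${HOSTNAME##*-}`, which strips the longest prefix ending in `-` and so leaves the StatefulSet ordinal. The sketch below reproduces that expansion on sample names; the function name `broker_id` is hypothetical.

```shell
# Hypothetical helper: apply the same expansion the StatefulSet uses for broker.id.
broker_id() {
  name="$1"
  # "##*-" removes the longest leading match of "*-",
  # keeping only what follows the last hyphen.
  echo "${name##*-}"
}

broker_id kafka-0   # prints 0
broker_id kafka-2   # prints 2
```

Because the ordinal is stable across pod restarts, each broker keeps the same ID and finds its own data again on the same PersistentVolume.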

5. Test Kafka

1) Check the brokers through ZooKeeper

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl exec -it zookeeper-1 -n kube-elasticsearch -- bash

zookeeper@zookeeper-1:/$ zkCli.sh

[zk: localhost:2181(CONNECTED) 0] get /brokers/ids/0

{"listener_security_protocol_map":{"PLAINTEXT":"PLAINTEXT"},"endpoints":["PLAINTEXT://kafka-0.kafka.kube-elasticsearch.svc.cluster.local:9092"],"jmx_port":-1,"host":"kafka-0.kafka.kube-elasticsearch.svc.cluster.local","timestamp":"1666318702856","port":9092,"version":4}

cZxid = 0x100000051

ctime = Fri Oct 21 02:18:15 UTC 2022

mZxid = 0x100000051

mtime = Fri Oct 21 02:18:15 UTC 2022

pZxid = 0x100000051

cversion = 0

dataVersion = 1

aclVersion = 0

ephemeralOwner = 0x183f8472ae40000

dataLength = 270

numChildren = 0

[zk: localhost:2181(CONNECTED) 1] get /brokers/ids/1

{"listener_security_protocol_map":{"PLAINTEXT":"PLAINTEXT"},"endpoints":["PLAINTEXT://kafka-1.kafka.kube-elasticsearch.svc.cluster.local:9092"],"jmx_port":-1,"host":"kafka-1.kafka.kube-elasticsearch.svc.cluster.local","timestamp":"1666318713387","port":9092,"version":4}

cZxid = 0x100000063

ctime = Fri Oct 21 02:18:25 UTC 2022

mZxid = 0x100000063

mtime = Fri Oct 21 02:18:25 UTC 2022

pZxid = 0x100000063

cversion = 0

dataVersion = 1

aclVersion = 0

ephemeralOwner = 0x183f8472ae40001

dataLength = 270

numChildren = 0

[zk: localhost:2181(CONNECTED) 2] get /brokers/ids/2

{"listener_security_protocol_map":{"PLAINTEXT":"PLAINTEXT"},"endpoints":["PLAINTEXT://kafka-2.kafka.kube-elasticsearch.svc.cluster.local:9092"],"jmx_port":-1,"host":"kafka-2.kafka.kube-elasticsearch.svc.cluster.local","timestamp":"1666318715645","port":9092,"version":4}

cZxid = 0x100000073

ctime = Fri Oct 21 02:18:35 UTC 2022

mZxid = 0x100000073

mtime = Fri Oct 21 02:18:35 UTC 2022

pZxid = 0x100000073

cversion = 0

dataVersion = 1

aclVersion = 0

ephemeralOwner = 0x183f8472ae40002

dataLength = 270

numChildren = 0

2) Kafka produce and consume test

# Create a topic

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl exec -it kafka-0 -n kube-elasticsearch -- bash

kafka@kafka-0:/$ cd /opt/kafka/bin

kafka@kafka-0:/opt/kafka/bin$ ./kafka-topics.sh --create --topic test --zookeeper zookeeper.kube-elasticsearch.svc.cluster.local:2181 --partitions 3 --replication-factor 3

Created topic test.

kafka@kafka-0:/opt/kafka/bin$ ./kafka-topics.sh --list --zookeeper zookeeper.kube-elasticsearch.svc.cluster.local:2181

test

# Produce messages

kafka@kafka-0:/opt/kafka/bin$ ./kafka-console-producer.sh --topic test --broker-list kafka-0.kafka.kube-elasticsearch.svc.cluster.local:9092

>123

>456

>789

# Open another terminal and consume the messages

kafka@kafka-0:/opt/kafka/bin$ ./kafka-console-consumer.sh --bootstrap-server kafka-0.kafka.kube-elasticsearch.svc.cluster.local:9092 --topic test --from-beginning

123

456

789

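The output above shows that messages are consumed correctly.

VII. Deploy Filebeat

Note: Filebeat is deployed as a DaemonSet and picks up container logs automatically.

1. Create the Filebeat ConfigMap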

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-filebeat-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: kube-elasticsearch
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |
    filebeat.inputs:
    - type: container
      paths:
        - '/var/lib/docker/containers/*/*.log'
      processors:
        - add_kubernetes_metadata:
            host: ${NODE_NAME}
            matchers:
            - logs_path:
                logs_path: "/var/lib/docker/containers/"
    processors:
      - add_cloud_metadata:
      - add_host_metadata:
    output:
      kafka:
        enabled: true
        hosts: ["kafka-0.kafka.kube-elasticsearch.svc.cluster.local:9092","kafka-1.kafka.kube-elasticsearch.svc.cluster.local:9092","kafka-2.kafka.kube-elasticsearch.svc.cluster.local:9092"]
        topic: "filebeat"
        max_message_bytes: 5242880

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-filebeat-configmap.yaml

configmap/filebeat-config created
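The `logs_path` matcher relies on Docker writing each container's log under a directory named after the container ID, which `add_kubernetes_metadata` can then map to pod metadata. As a rough illustration of that path layout only (the helper name and the sample ID are made up, and this is not Filebeat's actual implementation):

```shell
# Hypothetical helper: extract the container ID from a Docker JSON log path
# of the form /var/lib/docker/containers/<id>/<id>-json.log.
container_id_from_path() {
  rest="${1#/var/lib/docker/containers/}"   # drop the configured logs_path prefix
  echo "${rest%%/*}"                        # keep the first remaining path segment
}

container_id_from_path /var/lib/docker/containers/0123abcd/0123abcd-json.log
```

If the nodes use a container runtime other than Docker, the log files usually live elsewhere, so both `paths` and `logs_path` would need to be adjusted together.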

2. Create the Filebeat DaemonSet

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-filebeat-daemonset.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: kube-elasticsearch
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - name: filebeat
        image: docker.elastic.co/beats/filebeat:7.17.6
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        securityContext:
          runAsUser: 0
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0640
          name: filebeat-config
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-filebeat-daemonset.yaml

daemonset.apps/filebeat created

3. Create the Filebeat ClusterRoleBinding and related RBAC resources

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-filebeat-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-elasticsearch
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: filebeat
  namespace: kube-elasticsearch
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-elasticsearch
roleRef:
  kind: Role
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: filebeat-kubeadm-config
  namespace: kube-elasticsearch
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-elasticsearch
roleRef:
  kind: Role
  name: filebeat-kubeadm-config
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  - nodes
  verbs:
  - get
  - watch
  - list
- apiGroups: ["apps"]
  resources:
  - replicasets
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: filebeat
  namespace: kube-elasticsearch
  labels:
    k8s-app: filebeat
rules:
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs: ["get", "create", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: filebeat-kubeadm-config
  namespace: kube-elasticsearch
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""]
  resources:
  - configmaps
  resourceNames:
  - kubeadm-config
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: kube-elasticsearch
  labels:
    k8s-app: filebeat

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-filebeat-rbac.yaml

clusterrolebinding.rbac.authorization.k8s.io/filebeat created

rolebinding.rbac.authorization.k8s.io/filebeat created

rolebinding.rbac.authorization.k8s.io/filebeat-kubeadm-config created

clusterrole.rbac.authorization.k8s.io/filebeat created

role.rbac.authorization.k8s.io/filebeat created

role.rbac.authorization.k8s.io/filebeat-kubeadm-config created

serviceaccount/filebeat created

VIII. Deploy Logstash

1. Create the Logstash ConfigMap

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-logstash-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-configmap
  namespace: kube-elasticsearch
data:
  logstash.yml: |
    http.host: "0.0.0.0"
    path.config: /usr/share/logstash/pipeline
  logstash.conf: |
    input {
      kafka {
        bootstrap_servers => "kafka-0.kafka.kube-elasticsearch.svc.cluster.local:9092,kafka-1.kafka.kube-elasticsearch.svc.cluster.local:9092,kafka-2.kafka.kube-elasticsearch.svc.cluster.local:9092"
        topics => ["filebeat"]
        codec => "json"
      }
    }
    filter {
      date {
        match => [ "timestamp" , "dd/MMM/yyyy:HH:mm:ss Z" ]
      }
    }
    output {
      elasticsearch {
        hosts => ["elasticsearch-master:9200"]
        user => "elastic"
        password => "Aa123456"
        index => "kubernetes-%{+YYYY.MM.dd}"
      }
    }

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-logstash-configmap.yaml

configmap/logstash-configmap created
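
The `index` setting uses Logstash's date-format syntax: `%{+YYYY.MM.dd}` is filled in from each event's `@timestamp`, which Logstash keeps in UTC. A new daily index therefore starts at 00:00 UTC (08:00 in UTC+8), not at local midnight. As a rough illustration, the shell function below reproduces the resulting name for a given epoch second. The name `index_for_epoch` is hypothetical, and `date -d @…` assumes GNU coreutils.

```shell
#!/usr/bin/env bash
# Hypothetical helper: index name that "kubernetes-%{+YYYY.MM.dd}" yields for
# an event whose @timestamp equals the given epoch second (default: now).
index_for_epoch() {
  local epoch="${1:-$(date +%s)}"
  # -u forces UTC, matching how Logstash formats @timestamp.
  date -u -d "@${epoch}" '+kubernetes-%Y.%m.%d'
}

index_for_epoch 1700000000   # 2023-11-14 22:13:20 UTC -> kubernetes-2023.11.14
```

In UTC+8 that instant is already 06:13 on 15 November, yet the event still lands in the index for the 14th.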

2. Create the Logstash Deployment

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-logstash-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash-deployment
  namespace: kube-elasticsearch
spec:
  selector:
    matchLabels:
      app: logstash
  replicas: 1
  template:
    metadata:
      labels:
        app: logstash
    spec:
      containers:
      - name: logstash
        image: docker.elastic.co/logstash/logstash:7.17.6
        ports:
        - containerPort: 5044
        volumeMounts:
        - name: config-volume
          mountPath: /usr/share/logstash/config
        - name: logstash-pipeline-volume
          mountPath: /usr/share/logstash/pipeline
        - mountPath: /etc/localtime
          name: localtime
      volumes:
      - name: config-volume
        configMap:
          name: logstash-configmap
          items:
          - key: logstash.yml
            path: logstash.yml
      - name: logstash-pipeline-volume
        configMap:
          name: logstash-configmap
          items:
          - key: logstash.conf
            path: logstash.conf
      - hostPath:
          path: /etc/localtime
        name: localtime

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-logstash-deployment.yaml

deployment.apps/logstash-deployment created
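
Before moving on, it can help to wait for the rollout to finish and look at the Logstash log. This is a small sketch using standard kubectl subcommands; the function name `wait_for_logstash` and the 180-second timeout are arbitrary choices, not values from the original article.

```shell
#!/usr/bin/env bash
NS="kube-elasticsearch"

# Hypothetical helper: block until the Deployment has rolled out, then print
# the last lines of the Logstash log.
wait_for_logstash() {
  kubectl rollout status deployment/logstash-deployment -n "$NS" --timeout=180s \
    && kubectl logs deployment/logstash-deployment -n "$NS" --tail=50
}
```

Run `wait_for_logstash` after sourcing the snippet. Once startup has completed, the log should contain a line reporting that the pipelines are running; connection errors for Kafka or Elasticsearch would also show up here.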

3. Create the Logstash Service

[root@cmp-k8s-dev-master01 kube-elasticsearch]# vim kube-elasticsearch-logstash-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: logstash-service
  namespace: kube-elasticsearch
spec:
  selector:
    app: logstash
  type: NodePort
  ports:
  - protocol: TCP
    port: 5044
    targetPort: 5044
    nodePort: 30003

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl apply -f kube-elasticsearch-logstash-svc.yaml

service/logstash-service created
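
A quick way to check that the Service selector (`app: logstash`) matches the pod is to list the Service's endpoints. The sketch below uses `kubectl get endpoints` with a JSONPath expression; the function name `logstash_endpoints` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the pod IPs currently backing logstash-service.
# Empty output means the selector matches no ready pod.
logstash_endpoints() {
  kubectl get endpoints logstash-service -n kube-elasticsearch \
    -o jsonpath='{.subsets[*].addresses[*].ip}'
}
```

Note that with the pipeline shown earlier, whose only input is Kafka, nothing inside the container listens on port 5044. The Service therefore matters only if a `beats` input on that port is added later.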

4. List the created resources

[root@cmp-k8s-dev-master01 kube-elasticsearch]# kubectl get all -n kube-elasticsearch

NAME                                       READY   STATUS    RESTARTS   AGE
pod/elasticsearch-master-0                 1/1     Running   0          18h
pod/elasticsearch-master-1                 1/1     Running   0          18h
pod/elasticsearch-master-2                 1/1     Running   0          18h
pod/filebeat-4qlmq                         1/1     Running   0          23m
pod/filebeat-7722b                         1/1     Running   0          23m
pod/filebeat-86dfp                         1/1     Running   0          23m
pod/filebeat-c9cxr                         1/1     Running   0          23m
pod/filebeat-rvvs5                         1/1     Running   0          23m
pod/filebeat-tlmm7                         1/1     Running   0          23m
pod/kafka-0                                1/1     Running   0          68m
pod/kafka-1                                1/1     Running   0          68m
pod/kafka-2                                1/1     Running   0          67m
pod/kibana-7489b785c5-h22zw                1/1     Running   0          17h
pod/logstash-deployment-749d4fd95c-p6rs7   1/1     Running   0          3m13s
pod/zookeeper-0                            1/1     Running   0          85m
pod/zookeeper-1                            1/1     Running   0          85m
pod/zookeeper-2                            1/1     Running   0          85m

NAME                           TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
service/elasticsearch-master   NodePort   172.10.95.136    <none>        9200:30000/TCP   18h
service/kafka                  NodePort   172.10.252.178   <none>        9092:30001/TCP   80m
service/kibana-service         NodePort   172.10.103.201   <none>        5601:30002/TCP   17h
service/logstash-service       NodePort   172.10.120.74    <none>        5044:30003/TCP   5m28s
service/zookeeper              NodePort   172.10.20.238    <none>        2181:30004/TCP   119m

NAME                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/filebeat   6         6         6       6            6           <none>          26m

NAME                                  READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/kibana                1/1     1            1           17h
deployment.apps/logstash-deployment   1/1     1            1           3m13s

NAME                                             DESIRED   CURRENT   READY   AGE
replicaset.apps/kibana-7489b785c5                1         1         1       17h
replicaset.apps/logstash-deployment-749d4fd95c   1         1         1       3m13s

NAME                                    READY   AGE
statefulset.apps/elasticsearch-master   3/3     18h
statefulset.apps/kafka                  3/3     68m
statefulset.apps/zookeeper              3/3     85m

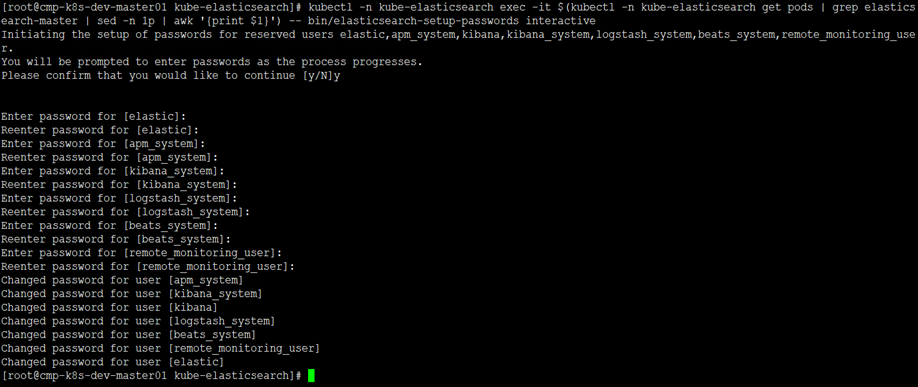

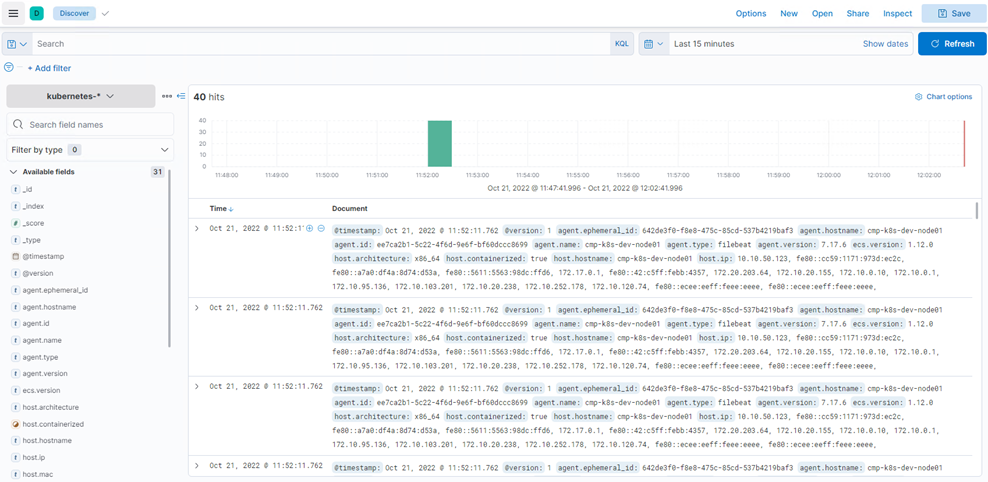

As shown above, all resources are running normally. Now open the Kibana page and check whether the index has been created.

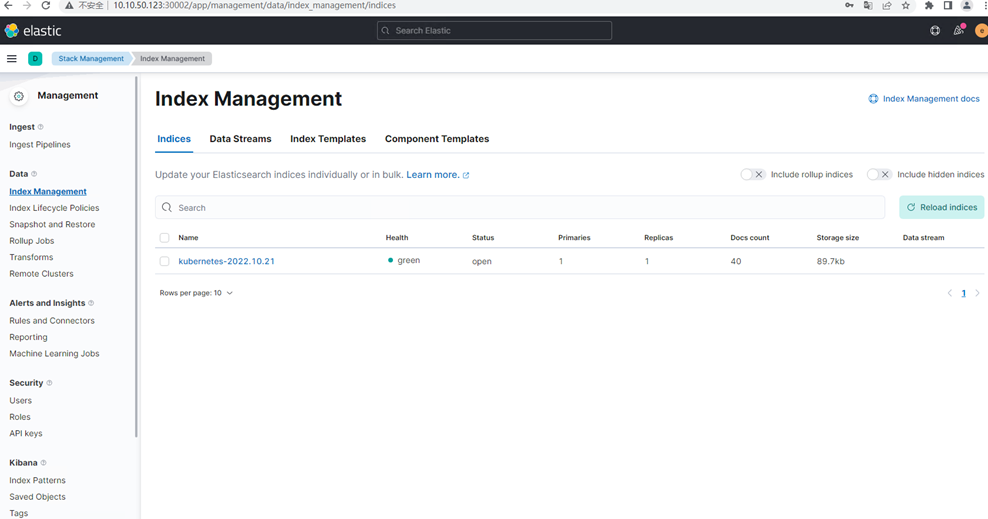
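
The same check can be made from the command line through the Elasticsearch `_cat/indices` API. This is a sketch under a few assumptions: it reaches Elasticsearch over plain HTTP on the `elasticsearch-master` NodePort (30000) of one node, and it reads the `elastic` user's password from an `ES_PASS` environment variable. The function name `list_kubernetes_indices` and the variable names are made up for illustration.

```shell
#!/usr/bin/env bash
ES_URL="http://10.10.50.123:30000"   # any node IP plus the elasticsearch-master NodePort
ES_USER="elastic"

# Hypothetical helper: list the daily indices written by Logstash.
list_kubernetes_indices() {
  # Abort with a message if the password has not been exported.
  : "${ES_PASS:?export ES_PASS first}"
  curl -sS -u "${ES_USER}:${ES_PASS}" "${ES_URL}/_cat/indices/kubernetes-*?v"
}
```

With `ES_PASS` exported, calling `list_kubernetes_indices` should print one row per `kubernetes-YYYY.MM.dd` index, including its health and document count.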

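5. Access Kibana

1) Open http://10.10.50.123:30002/ in a browser and log in with your username and password.

2) Create an index pattern and view the data.

This completes the deployment of the ELK cluster on K8s.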
