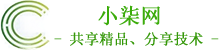

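I. Prometheus Overview

II. Basic Concepts

Data model: Prometheus stores all data as time series, that is, streams of timestamped values that belong to the same metric name and the same set of labels (key-value pairs).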

Article source: 小柒网 - https://www.yangxingzhen.cn/8352.html

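Metrics and labels: every time series is uniquely identified by its metric name and a set of labels (key-value pairs). Labels are what give Prometheus its multi-dimensional data model.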


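Instances and jobs: in Prometheus, an endpoint that can be scraped is called an instance, which usually corresponds to a single process. A collection of instances with the same purpose (for example, a process replicated for scalability or availability) is called a job.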
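The identity rule described above can be tried out on a single line in the Prometheus text exposition format. This is only a minimal sketch and not part of the original setup: the helper names `metric_name` and `label_value` are hypothetical, and the sample series (the `up` metric with illustrative `job` and `instance` values) is made up for the example.

```shell
# A sample series: metric name, label set, then the value.
series='up{job="kubernetes-node",instance="10.10.50.24:9100"} 1'

# Print the metric name (everything before the label set).
metric_name() {
  printf '%s\n' "$1" | sed -E 's/^([a-zA-Z_:][a-zA-Z0-9_:]*).*/\1/'
}

# Print the value of one label: label_value <series> <label>
label_value() {
  printf '%s\n' "$1" | sed -nE "s/.*[{,]$2=\"([^\"]*)\".*/\1/p"
}

metric_name "$series"
label_value "$series" job
label_value "$series" instance
```

The `job` and `instance` labels are the ones Prometheus attaches to every scraped series, so two series with the same metric name but different `instance` values are stored as separate time series.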


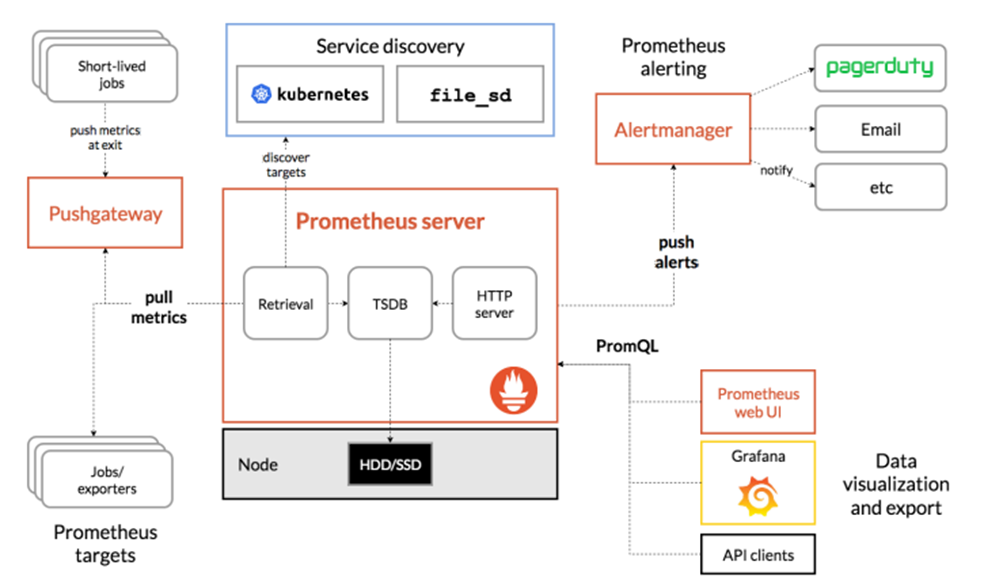

III. Component Architecture


- Prometheus server

Retrieval scrapes metrics from the active targets. Storage writes the collected data to disk. PromQL is the query language module that Prometheus provides.


- Exporters

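Prometheus supports many kinds of exporters. An exporter collects metrics and exposes them over HTTP so that the Prometheus server can scrape them. Any program that provides monitoring data to the Prometheus server can be called an exporter.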


- Client Library

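Client libraries instrument application code. When Prometheus scrapes the HTTP endpoint of an instance, the client library returns the current state of all tracked metrics to the Prometheus server.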


- Alertmanager

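After receiving alerts from the Prometheus server, Alertmanager deduplicates and groups them, routes them to the matching receiver, and sends the notification. Common receivers include email, WeChat, DingTalk, and Slack.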


- Grafana

Monitoring dashboards that visualize the monitoring data.


- Pushgateway

Target hosts can push their data to the Pushgateway, and the Prometheus server then scrapes everything from the Pushgateway in one place.
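As a rough illustration of the push path, the sketch below builds the Pushgateway URL for a job/instance grouping key and pipes one sample to it with `curl`. The address `pushgateway.example.org:9091`, the helper names `push_url` and `push_metric`, and the metric in the example are placeholders chosen for illustration; substitute your own values.

```shell
# Placeholder address; replace with your own Pushgateway.
PUSHGATEWAY_URL="${PUSHGATEWAY_URL:-http://pushgateway.example.org:9091}"

# Build the push URL for a job and instance grouping key.
push_url() {
  printf '%s/metrics/job/%s/instance/%s\n' "${1%/}" "$2" "$3"
}

# Push one sample: push_metric <job> <instance> <metric> <value>
push_metric() {
  printf '%s %s\n' "$3" "$4" |
    curl -s --data-binary @- "$(push_url "$PUSHGATEWAY_URL" "$1" "$2")"
}

# Example (needs a reachable Pushgateway):
#   push_metric backup_job host01 backup_duration_seconds 42
```

Prometheus then scrapes the Pushgateway like any other target, so pushed values stay visible until they are overwritten or deleted.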


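Note: this guide is based on Kubernetes v1.25.2. For other versions, adjust the resource API versions and configuration as needed.

IV. Install NFS

1. Install NFS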

[root@k8s-master ~]# yum -y install rpcbind nfs-utils

2. Create the shared directories

[root@k8s-master ~]# mkdir -p /data/{prometheus,grafana,alertmanager}

[root@k8s-master ~]# chmod 777 /data/{prometheus,grafana,alertmanager}

3. Configure exports

[root@k8s-master ~]# cat >> /etc/exports <<EOF
/data/prometheus 10.10.50.0/24(rw,no_root_squash,no_all_squash,sync)
/data/grafana 10.10.50.0/24(rw,no_root_squash,no_all_squash,sync)
/data/alertmanager 10.10.50.0/24(rw,no_root_squash,no_all_squash,sync)
EOF

4. Start the rpcbind and nfs services

[root@k8s-master ~]# systemctl start rpcbind

[root@k8s-master ~]# systemctl start nfs

[root@k8s-master ~]# systemctl enable rpcbind

[root@k8s-master ~]# systemctl enable nfs

5. List the exported directories

[root@k8s-master ~]# showmount -e 10.10.50.24

Export list for 10.10.50.24:

/data/grafana 10.10.50.0/24

/data/prometheus 10.10.50.0/24

/data/alertmanager 10.10.50.0/24
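The check above can also be scripted. The following sketch reads the output of `showmount -e` on standard input and prints every expected export that is missing; the function name `missing_exports` is hypothetical, and the three paths are the ones created in step 2.

```shell
# Print each expected export path that does not appear in the
# `showmount -e` output supplied on stdin.
missing_exports() {
  listing=$(cat)
  for dir in "$@"; do
    printf '%s\n' "$listing" |
      awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }' \
      || printf '%s\n' "$dir"
  done
}

# Example (run on a host that can reach the NFS server):
#   showmount -e 10.10.50.24 | missing_exports /data/prometheus /data/grafana /data/alertmanager
```

No output means that all three directories are exported.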

V. Install Node-exporter

1. Create a directory for the configuration files

[root@k8s-master ~]# mkdir /opt/kube-monitor

[root@k8s-master ~]# cd /opt/kube-monitor

2. Create the namespace

[root@k8s-master kube-monitor]# vim kube-monitor-namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: kube-monitor
  labels:
    app: monitor

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-namespace.yaml

namespace/kube-monitor created

3. Create a DaemonSet so that every node runs one Pod that collects data

[root@k8s-master kube-monitor]# vim kube-monitor-node-exporter.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-monitor
  labels:
    name: node-exporter
spec:
  selector:
    matchLabels:
      name: node-exporter
  template:
    metadata:
      labels:
        name: node-exporter
    spec:
      hostPID: true
      hostIPC: true
      hostNetwork: true # share the host's network and process namespaces
      containers:
      - name: node-exporter
        image: prom/node-exporter:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9100 # port exposed by the container
        resources:
          requests:
            cpu: 0.15
        securityContext:
          privileged: true # run in privileged mode
        args:
        - --path.procfs
        - /host/proc
        - --path.sysfs
        - /host/sys
        - --collector.filesystem.ignored-mount-points
        - '^/(sys|proc|dev|host|etc)($|/)'
        volumeMounts: # mount host directories to collect host information
        - name: dev
          mountPath: /host/dev
        - name: proc
          mountPath: /host/proc
        - name: sys
          mountPath: /host/sys
        - name: rootfs
          mountPath: /rootfs
      tolerations: # tolerate every taint so the Pod can also run on the tainted master
      - operator: "Exists"
      volumes: # volume definitions
      - name: proc
        hostPath:
          path: /proc
      - name: dev
        hostPath:
          path: /dev
      - name: sys
        hostPath:
          path: /sys
      - name: rootfs
        hostPath:
          path: /

4. Deploy node-exporter

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-node-exporter.yaml

daemonset.apps/node-exporter created

5. Check that the deployment succeeded

[root@k8s-master kube-monitor]# kubectl get pod -n kube-monitor

NAME READY STATUS RESTARTS AGE

node-exporter-h2hzl 1/1 Running 0 60s

6. Check that node-exporter is collecting data

[root@k8s-master kube-monitor]# curl -s http://10.10.50.24:9100/metrics |grep node_load

# HELP node_load1 1m load average.

# TYPE node_load1 gauge

node_load1 0.19

# HELP node_load15 15m load average.

# TYPE node_load15 gauge

node_load15 0.36

# HELP node_load5 5m load average.

# TYPE node_load5 gauge

node_load5 0.3

Note: if the data above is returned, node-exporter was installed successfully.
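To pull a single value out of that output instead of reading it by eye, a small `awk` filter is enough. This is a sketch under one assumption: it only handles metrics without labels, such as the `node_load*` gauges shown above. The function name `metric_value` is hypothetical.

```shell
# Print the value of an unlabelled metric from text exposition on stdin.
# `# HELP` and `# TYPE` lines are skipped because their first field is `#`.
metric_value() {
  awk -v m="$1" '$1 == m { print $2 }'
}

# Example (needs network access to the node):
#   curl -s http://10.10.50.24:9100/metrics | metric_value node_load1
```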

VI. Install Prometheus

1. Create the service account

[root@k8s-master kube-monitor]# kubectl create serviceaccount monitor -n kube-monitor

serviceaccount/monitor created

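2. Grant permissions to the service account (the `--serviceaccount` value has the form `<namespace>:<name>`)

[root@k8s-master kube-monitor]# kubectl create clusterrolebinding monitor-clusterrolebinding -n kube-monitor --clusterrole=cluster-admin --serviceaccount=kube-monitor:monitor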

clusterrolebinding.rbac.authorization.k8s.io/monitor-clusterrolebinding created
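Whether the binding actually applies to the account can be checked by impersonating it. The sketch below assumes the account `monitor` lives in the `kube-monitor` namespace, as created in step 1. `sa_subject` and `sa_can` are hypothetical helper names; `kubectl auth can-i` with `--as` is the standard way to ask the API server about another identity.

```shell
# Format the user name Kubernetes assigns to a service account.
sa_subject() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask the API server whether the account may perform an action:
#   sa_can <namespace> <account> <verb> <resource>
sa_can() {
  kubectl auth can-i "$3" "$4" --as="$(sa_subject "$1" "$2")"
}

# Example (needs a working kubeconfig):
#   sa_can kube-monitor monitor list nodes
```

If the command answers `no`, check that the namespace in the binding's subject matches the namespace where the account was created.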

3. Create the StorageClass

[root@k8s-master kube-monitor]# vim kube-monitor-storageclass.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-monitor
  namespace: kube-monitor
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-storageclass.yaml

storageclass.storage.k8s.io/nfs-monitor created

4. Create the Prometheus ConfigMap

[root@k8s-master kube-monitor]# vim kube-monitor-prometheus-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-monitor
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 10s
      evaluation_interval: 1m
    scrape_configs:
    - job_name: 'kubernetes-node'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: kubernetes-pods
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_scrape
      - action: replace
        regex: (.+)
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_path
        target_label: __metrics_path__
      - action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        source_labels:
        - __address__
        - __meta_kubernetes_pod_annotation_prometheus_io_port
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: kubernetes_pod_name
    - job_name: 'kubernetes-apiserver'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
    - job_name: 'kubernetes-services'
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__address__]
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-prometheus-configmap.yaml

configmap/prometheus-config created
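The two address rewrites in this configuration are easier to follow when replayed outside Prometheus. The sketch below approximates them with `sed`; it is an illustration rather than Prometheus's own code. Prometheus anchors relabel regexes at both ends, so the `sed` patterns add `^` and `$` explicitly, and because `sed -E` has no non-capturing groups the port capture moves from group 2 to group 3. The function names and the Pod address in the usage lines are hypothetical.

```shell
# kubernetes-node job: point the kubelet address (:10250) at node-exporter (:9100).
rewrite_kubelet_port() {
  printf '%s\n' "$1" | sed -E 's/^(.*):10250$/\1:9100/'
}

# kubernetes-pods / service-endpoints jobs: the input is
# "<__address__>;<prometheus.io/port annotation>", joined with the default
# separator ";". The annotated port replaces whatever port was discovered.
rewrite_annotated_port() {
  printf '%s\n' "$1" | sed -E 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/'
}

rewrite_kubelet_port '10.10.50.24:10250'
rewrite_annotated_port '172.16.0.5:8080;9102'
```

As in Prometheus, an input that does not match the pattern is left unchanged, which is why targets without the annotation keep their discovered address.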

5. Create the Prometheus PV

[root@k8s-master kube-monitor]# vim kube-monitor-prometheus-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-pv
  labels:
    name: prometheus-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs-monitor
  nfs:
    path: /data/prometheus
    server: 10.10.50.24

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-prometheus-pv.yaml

persistentvolume/prometheus-pv created

6. Create the Prometheus PVC

[root@k8s-master kube-monitor]# vim kube-monitor-prometheus-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus-pvc
  namespace: kube-monitor
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: nfs-monitor
  resources:
    requests:
      storage: 10Gi

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-prometheus-pvc.yaml

persistentvolumeclaim/prometheus-pvc created

7. Create the Prometheus Deployment

[root@k8s-master kube-monitor]# vim kube-monitor-prometheus-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus-server
  namespace: kube-monitor
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
      component: server
  template:
    metadata:
      labels:
        app: prometheus
        component: server
      annotations:
        prometheus.io/scrape: 'false' # this Pod is not discovered and scraped by Prometheus; other Pods can set this annotation to 'true' to be picked up automatically through service discovery
    spec:
      serviceAccountName: monitor # service account that gives the container permission to read cluster data
      containers:
      - name: prometheus # container name
        image: prom/prometheus:v2.27.1 # image
        imagePullPolicy: IfNotPresent # image pull policy
        command: # command run when the container starts
        - prometheus
        - --config.file=/etc/prometheus/prometheus.yml
        - --storage.tsdb.path=/prometheus # directory where the time series data is stored
        - --storage.tsdb.retention=720h # how long the data is kept
        - --web.enable-lifecycle # enable hot reload
        ports: # ports exposed by the container
        - containerPort: 9090
          protocol: TCP # protocol
        volumeMounts: # volumes mounted into the container
        - mountPath: /etc/prometheus # mount point
          name: prometheus-config # volume to mount (matches a volume defined below)
        - mountPath: /prometheus/
          name: prometheus-data
      volumes: # volume definitions
      - name: prometheus-config # volume name
        configMap: # take the data from a ConfigMap
          name: prometheus-config # name of the ConfigMap
      - name: prometheus-data
        persistentVolumeClaim:
          claimName: prometheus-pvc

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-prometheus-deploy.yaml

deployment.apps/prometheus-server created

8. Check that the Deployment succeeded

[root@k8s-master kube-monitor]# kubectl get deployment -n kube-monitor

[root@k8s-master kube-monitor]# kubectl get pod -n kube-monitor

NAME READY STATUS RESTARTS AGE

node-exporter-h2hzl 1/1 Running 0 25m

prometheus-server-5878b54764-vjnmf 1/1 Running 0 60s

9. Create the Prometheus Service

[root@k8s-master kube-monitor]# vim kube-monitor-prometheus-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: kube-monitor
  labels:
    app: prometheus
spec:
  type: NodePort
  ports:
  - port: 9090
    targetPort: 9090
    nodePort: 30001
    protocol: TCP
  selector:
    app: prometheus
    component: server

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-prometheus-svc.yaml

service/prometheus created

10. View the Service

[root@k8s-master kube-monitor]# kubectl get svc -n kube-monitor

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

prometheus NodePort 172.15.225.209 <none> 9090:30001/TCP 51s

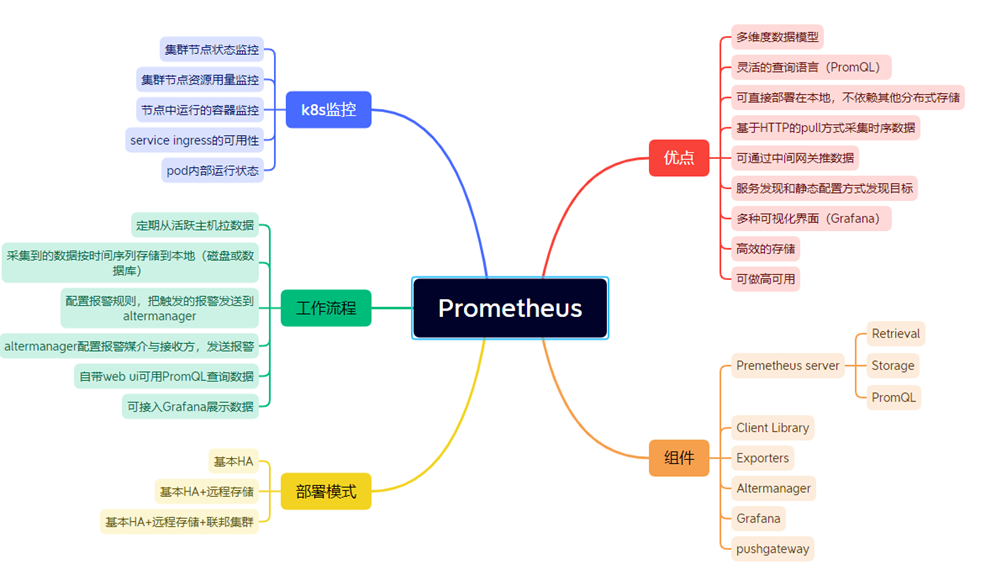

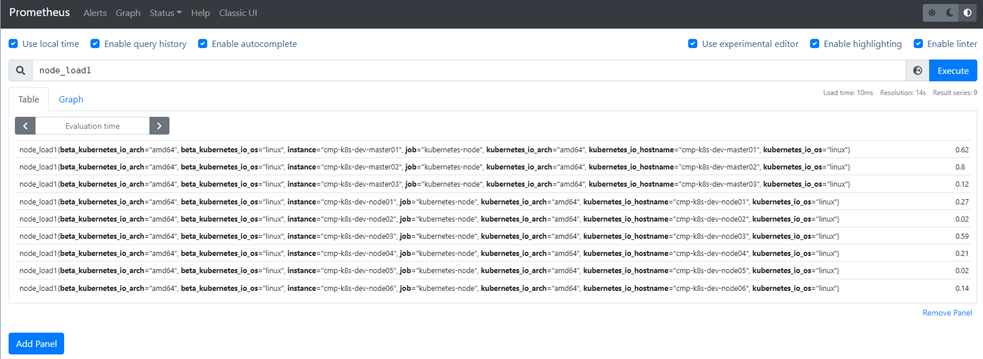

11. Open the Prometheus web UI in a browser

# Enter http://10.10.50.24:30001, as shown in the screenshot

12. Query the load metrics to verify that the Prometheus server is running correctly
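The same check can be made from the command line through the Prometheus HTTP API, which serves instant queries at `/api/v1/query`. The following is a minimal sketch: the base URL is the NodePort address used in this guide, and the helper names `query_endpoint` and `prom_query` are hypothetical.

```shell
# Base URL of the NodePort Service; adjust for your cluster.
PROM_URL="${PROM_URL:-http://10.10.50.24:30001}"

# Build the instant-query endpoint for a base URL (a trailing slash is dropped).
query_endpoint() {
  printf '%s/api/v1/query\n' "${1%/}"
}

# Run an instant query; curl URL-encodes the PromQL expression.
prom_query() {
  curl -s -G --data-urlencode "query=$1" "$(query_endpoint "$PROM_URL")"
}

# Example (needs network access to Prometheus):
#   prom_query 'node_load1'
```

A JSON response whose `status` field is `success` and whose `result` array is not empty indicates that the server is scraping node-exporter.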

VII. Install Grafana

1. Create the Grafana PV

[root@k8s-master kube-monitor]# vim kube-monitor-grafana-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: grafana-pv
  labels:
    name: grafana-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs-monitor
  nfs:
    path: /data/grafana
    server: 10.10.50.24

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-grafana-pv.yaml

persistentvolume/grafana-pv created

2. Create the Grafana PVC

[root@k8s-master kube-monitor]# vim kube-monitor-grafana-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-pvc
  namespace: kube-monitor
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: nfs-monitor
  resources:
    requests:
      storage: 10Gi

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-grafana-pvc.yaml

persistentvolumeclaim/grafana-pvc created

3. Create the Grafana Deployment manifest with the following content

[root@k8s-master kube-monitor]# vim kube-monitor-grafana-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana-server
  namespace: kube-monitor
  labels:
    app: grafana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
      component: server
  template:
    metadata:
      labels:
        app: grafana
        component: server
    spec:
      containers:
      - name: grafana
        image: grafana/grafana:8.5.14
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certificates
          readOnly: true
        - mountPath: /var
          name: grafana-storage
        - mountPath: /var/lib/grafana/
          name: grafana-data
        env:
        - name: INFLUXDB_HOST
          value: monitoring-influxdb
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          value: /
      volumes:
      - name: ca-certificates
        hostPath:
          path: /etc/ssl/certs
      - name: grafana-storage
        emptyDir: {}
      - name: grafana-data
        persistentVolumeClaim:
          claimName: grafana-pvc

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-grafana-deploy.yaml

deployment.apps/grafana-server created

4. Check that Grafana was created

[root@k8s-master kube-monitor]# kubectl get deployment,pod -n kube-monitor

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/grafana-server 1/1 1 1 53s

deployment.apps/prometheus-server 1/1 1 1 21m

NAME READY STATUS RESTARTS AGE

pod/grafana-server-847d9d6794-h6ddd 1/1 Running 0 53s

pod/node-exporter-h2hzl 1/1 Running 0 45m

pod/prometheus-server-5878b54764-vjnmf 1/1 Running 0 21m

5. Create the Grafana Service

[root@k8s-master kube-monitor]# vim kube-monitor-grafana-svc.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitor-grafana
  name: grafana
  namespace: kube-monitor
spec:
  ports:
  - name: grafana
    port: 3000
    targetPort: 3000
    nodePort: 30002
  selector:
    app: grafana
  type: NodePort

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-grafana-svc.yaml

6. View the Service

[root@k8s-master kube-monitor]# kubectl get svc -n kube-monitor

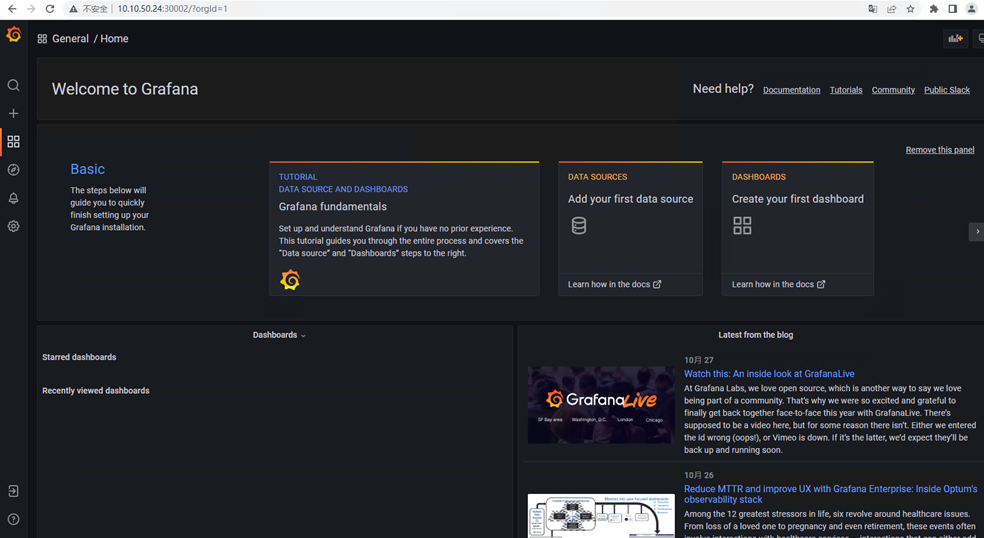

7. Open Grafana in a browser

# Enter http://10.10.50.24:30002, as shown in the screenshot

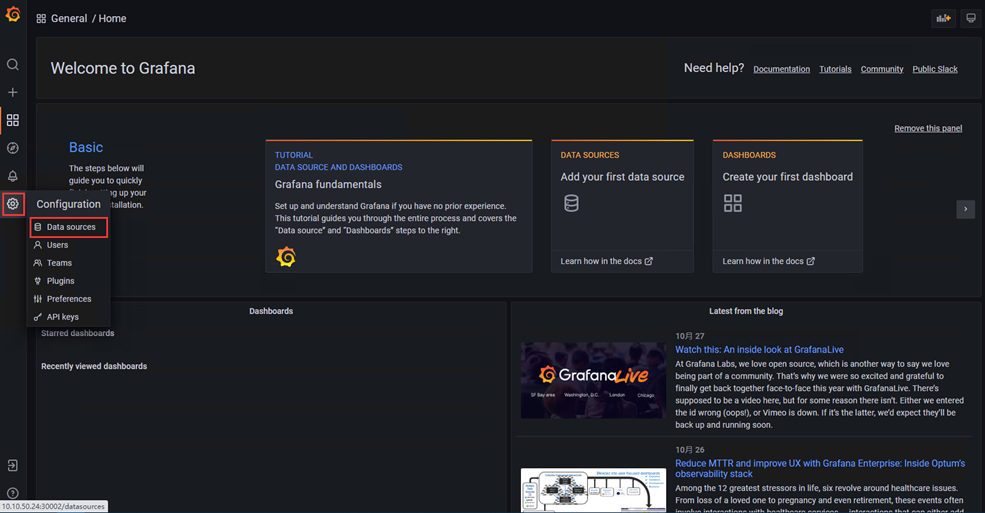

VIII. Configure Grafana

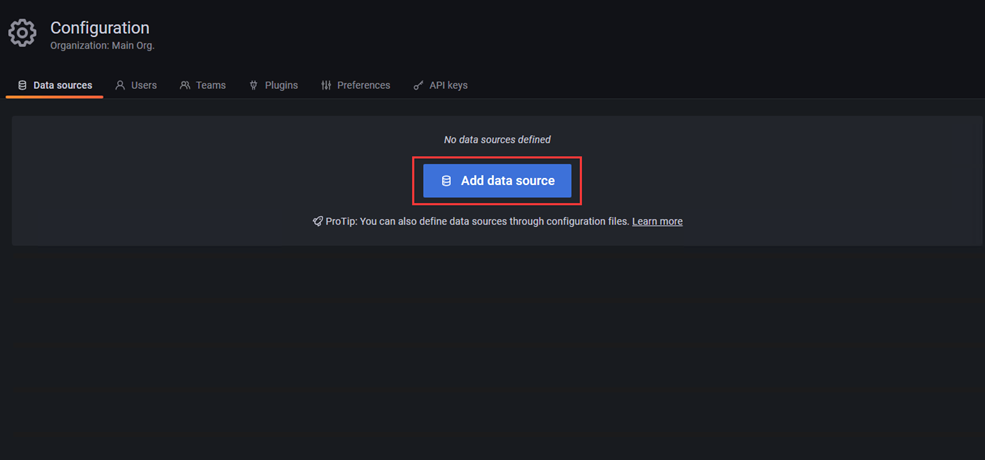

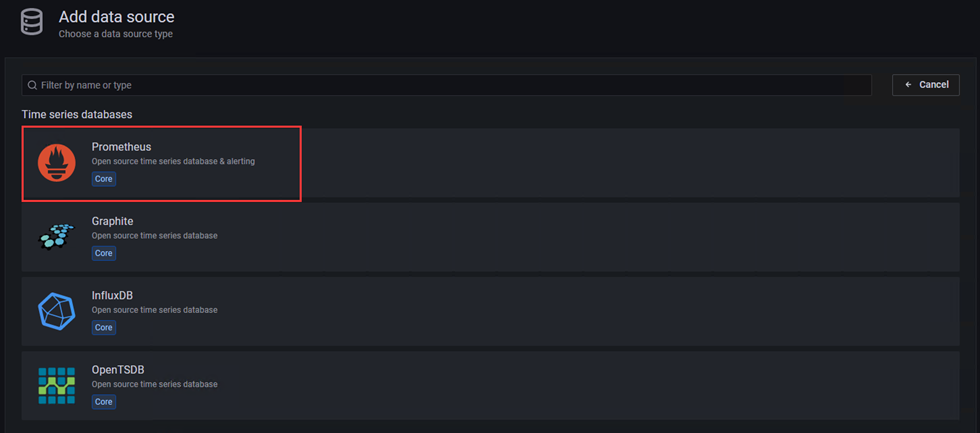

1. Add a Prometheus data source

2. Select Prometheus as the data source type to open its configuration page

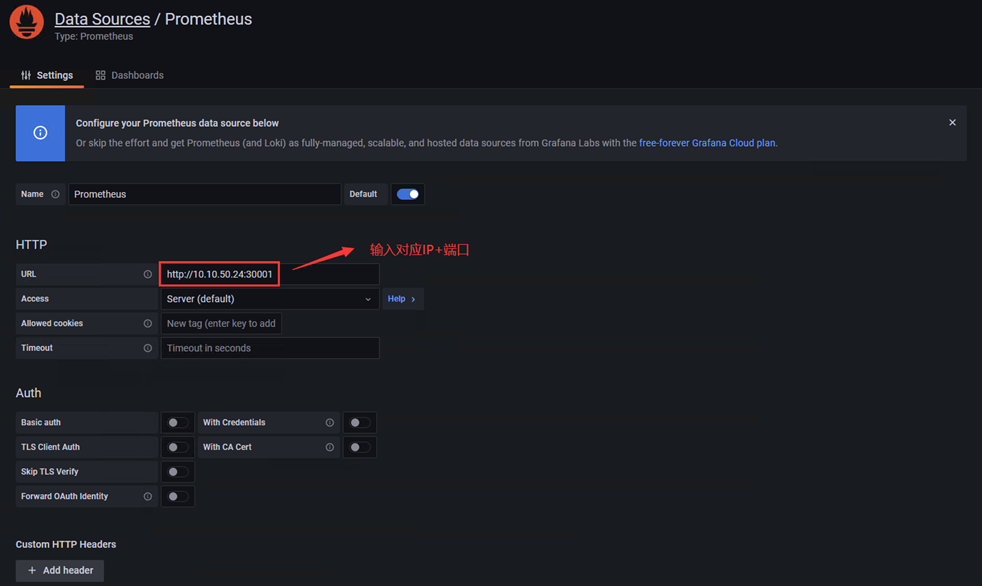

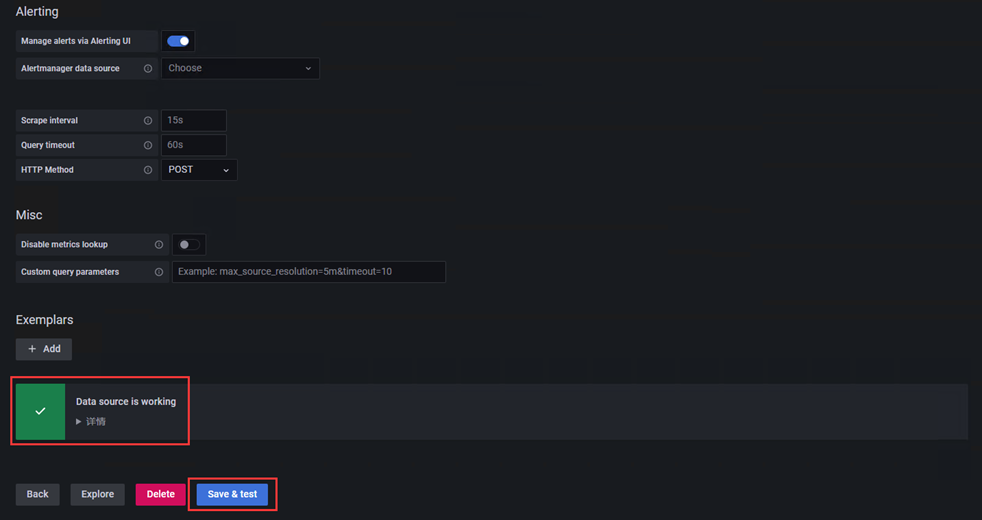

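3. Configure the data source as follows

# When done, click Save & Test in the lower-left corner. A message such as "Data source is working" means the Prometheus data source was configured successfully.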
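The data source settings themselves appear only in the screenshots, so the following is a hedged sketch of how the URL field could be derived when Grafana runs in the same cluster as Prometheus: a Service is reachable at `<service>.<namespace>.svc` on its Service port. The helper name `svc_url` is hypothetical; the Service name, namespace, and port are those of the Prometheus Service created earlier.

```shell
# Build the in-cluster URL of a Service: svc_url <service> <namespace> <port>
svc_url() {
  printf 'http://%s.%s.svc:%s\n' "$1" "$2" "$3"
}

svc_url prometheus kube-monitor 9090
```

The NodePort address `http://10.10.50.24:30001` should work as well, but the in-cluster name does not depend on any particular node's IP.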

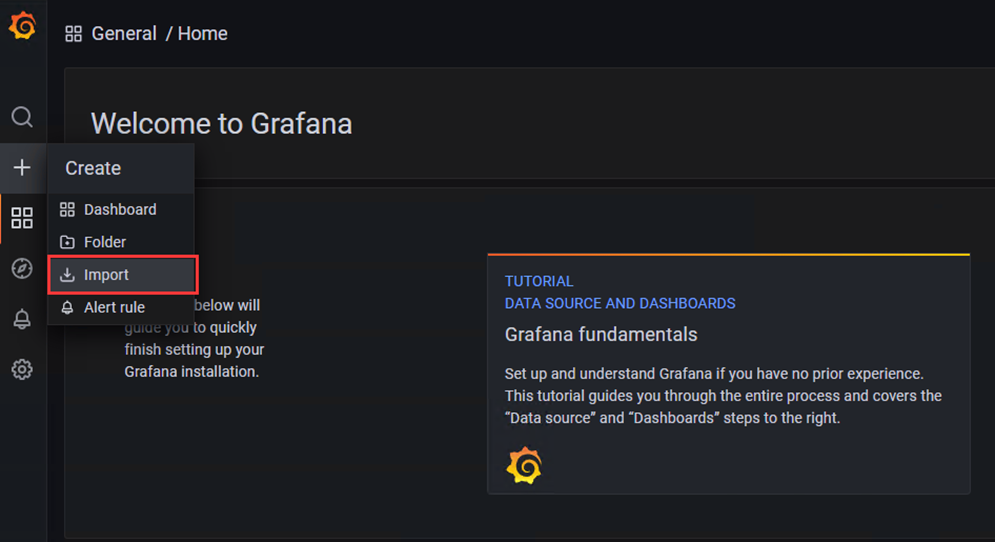

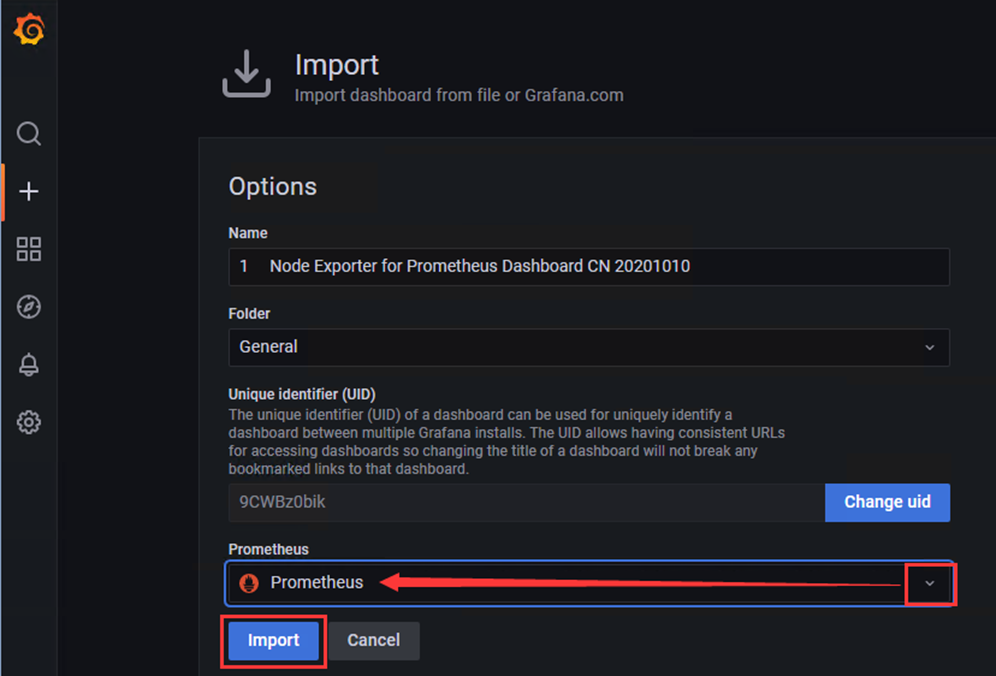

4. Download the dashboard template

Template URL: https://grafana.com/grafana/dashboards/16098-1-node-exporter-for-prometheus-dashboard-cn-0417-job/

1) Click the '+' menu, then click Import

2) Select the downloaded JSON template file and click Import

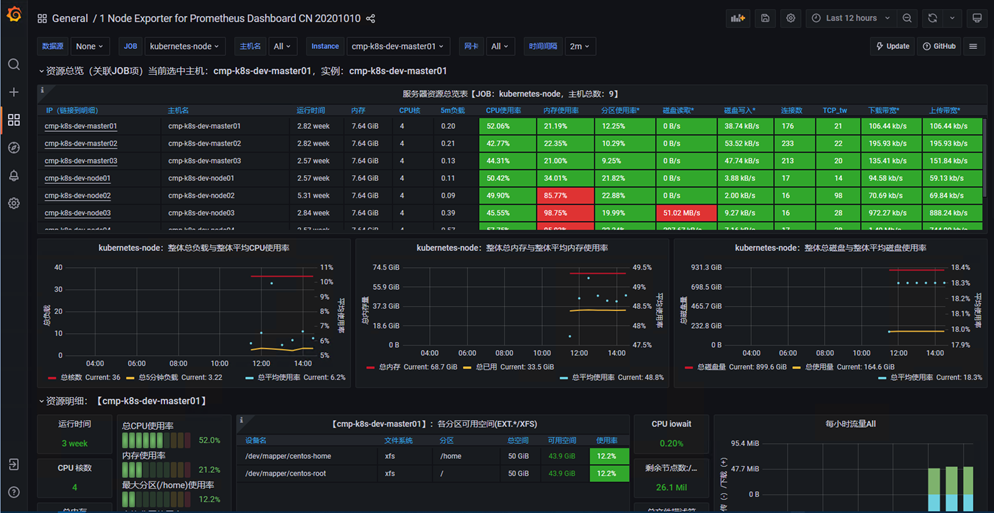

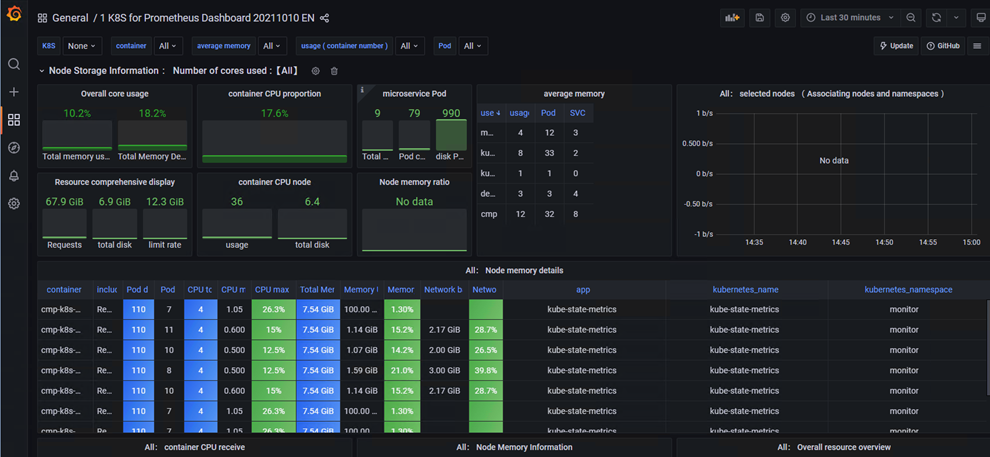

3) View the dashboard

IX. Install kube-state-metrics (monitors the state of Kubernetes resources)

1. Create the RBAC authorization with the following content

[root@k8s-master kube-monitor]# vim kube-state-metrics-rbac.yaml

---
apiVersion: v1 # API version: v1
kind: ServiceAccount # resource type: service account
metadata: # metadata
  name: kube-state-metrics # name
  namespace: kube-monitor # namespace
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole # resource type: cluster role
metadata:
  name: kube-state-metrics
rules:
- apiGroups: [""]
  resources: ["nodes", "pods", "services", "resourcequotas", "replicationcontrollers", "limitranges", "persistentvolumeclaims", "persistentvolumes", "namespaces", "endpoints"]
  verbs: ["list", "watch"]
- apiGroups: ["extensions", "apps"] # on Kubernetes v1.25 these resources are served from the apps group
  resources: ["daemonsets", "deployments", "replicasets"]
  verbs: ["list", "watch"]
- apiGroups: ["apps"]
  resources: ["statefulsets"]
  verbs: ["list", "watch"]
- apiGroups: ["batch"]
  resources: ["cronjobs", "jobs"]
  verbs: ["list", "watch"]
- apiGroups: ["autoscaling"]
  resources: ["horizontalpodautoscalers"]
  verbs: ["list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-monitor

[root@k8s-master kube-monitor]# kubectl apply -f kube-state-metrics-rbac.yaml

serviceaccount/kube-state-metrics created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

2. Install kube-state-metrics with a Deployment, using the following content

[root@k8s-master kube-monitor]# vim kube-state-metrics-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kube-state-metrics
  namespace: kube-monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kube-state-metrics
  template:
    metadata:
      labels:
        app: kube-state-metrics
    spec:
      serviceAccountName: kube-state-metrics
      containers:
      - name: kube-state-metrics
        image: quay.io/coreos/kube-state-metrics:v1.9.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080

[root@k8s-master kube-monitor]# kubectl apply -f kube-state-metrics-deploy.yaml

deployment.apps/kube-state-metrics created

3. Check that it was created

[root@k8s-master kube-monitor]# kubectl get deployment,pod -n kube-monitor

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/grafana-server 1/1 1 1 13h

deployment.apps/kube-state-metrics 1/1 1 1 44s

deployment.apps/prometheus-server 1/1 1 1 14h

NAME READY STATUS RESTARTS AGE

pod/grafana-server-847d9d6794-h6ddd 1/1 Running 0 13h

pod/kube-state-metrics-7594ddfc96-js97z 1/1 Running 0 44s

pod/node-exporter-h2hzl 1/1 Running 0 14h

4. Create the Service with the following content

[root@k8s-master kube-monitor]# vim kube-state-metrics-svc.yaml

apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/scrape: 'true'
  name: kube-state-metrics
  namespace: kube-monitor
  labels:
    app: kube-state-metrics
spec:
  ports:
  - name: kube-state-metrics
    port: 8080
    protocol: TCP
  selector:
    app: kube-state-metrics

[root@k8s-master kube-monitor]# kubectl apply -f kube-state-metrics-svc.yaml

service/kube-state-metrics created

5. View the Service

[root@k8s-master kube-monitor]# kubectl get svc -n kube-monitor

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana NodePort 172.15.244.177 <none> 3000:30002/TCP 13h

kube-state-metrics ClusterIP 172.15.163.99 <none> 8080/TCP 38s

prometheus NodePort 172.15.225.209 <none> 9090:30001/TCP 13h

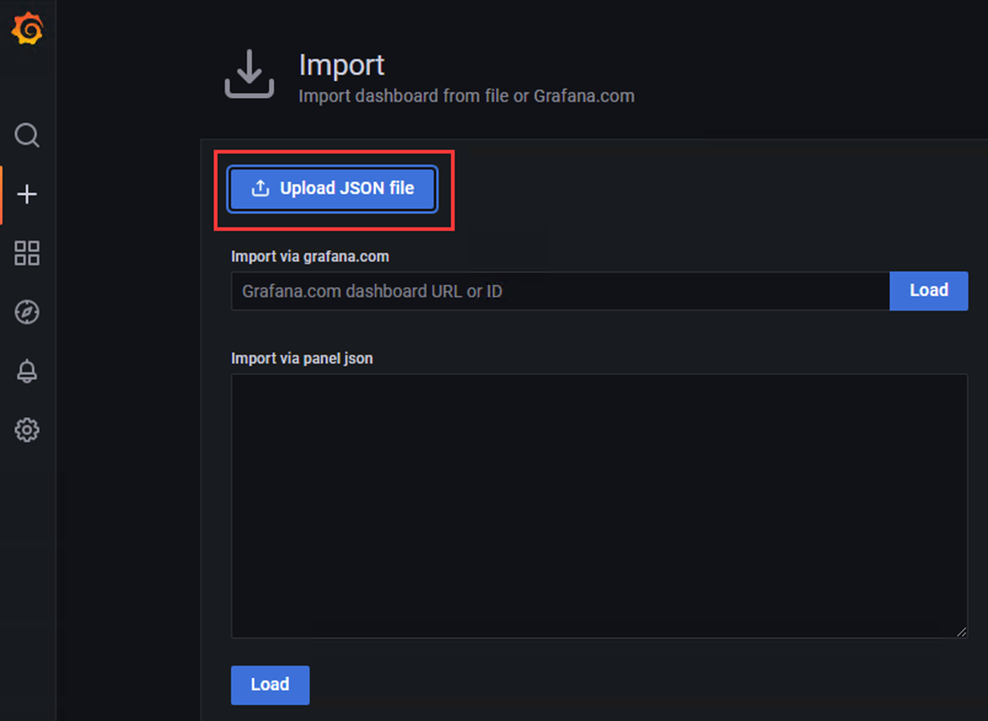

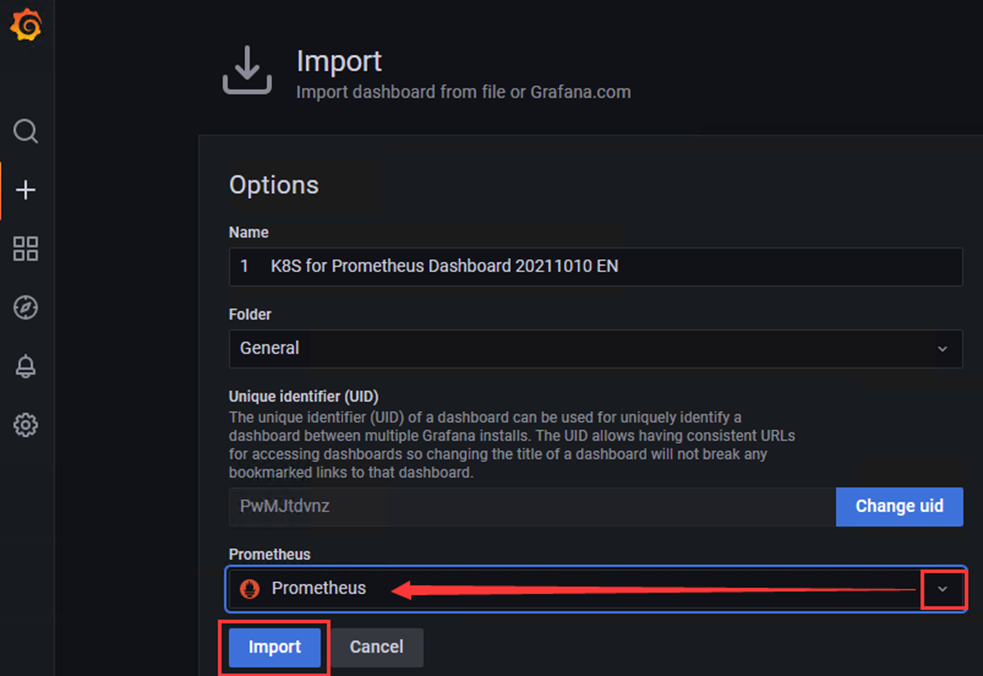

6. Import the dashboard template

Template URL: https://grafana.com/grafana/dashboards/15661-1-k8s-for-prometheus-dashboard-20211010/

# Import the JSON template file

# View the monitoring status

X. Install Alertmanager

1. Create the Alertmanager configuration and the alert template

[root@k8s-master kube-monitor]# vim kube-monitor-alertmanager-configmap.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: alertmanager-config
  namespace: kube-monitor
data:
  alertmanager.yml: | # Alertmanager configuration file
    global:
      resolve_timeout: 5m
      smtp_smarthost: 'smtp.qq.com:465' # SMTP server of the sender
      smtp_from: '675583110@qq.com' # sender address
      smtp_auth_username: '675583110@qq.com' # sender mailbox user name
      smtp_auth_password: 'Aa123456' # sender authorization code
      smtp_require_tls: false
    templates:
    - '/etc/alertmanager/email.tmpl'
    route: # alert routing policy
      group_by: ['alertname'] # label used to group alerts
      group_wait: 10s # wait before the first notification of a group (alerts of the same group arriving within 10s are sent together)
      group_interval: 10s # wait before notifying about new alerts in a group that was already notified
      repeat_interval: 10m # interval before a notification is repeated
      receiver: email # receiver to use
    receivers:
    - name: 'email' # receiver name (matches the one above)
      email_configs: # recipient mailbox configuration
      - to: '675583110@qq.com' # recipient address (the mailbox that should receive alerts)
        headers: { Subject: "Prometheus [Warning] alert email" }
        html: '{{ template "email.html" . }}'
        send_resolved: true # whether to notify about resolved alerts
  email.tmpl: | # alert template
    {{ define "email.html" }}
    {{- if gt (len .Alerts.Firing) 0 -}}
    {{ range .Alerts.Firing }}
    Alert source: Prometheus_Alertmanager <br>
    Severity: {{ .Labels.severity }} <br>
    Status: {{ .Status }} <br>
    Alert name: {{ .Labels.alertname }} <br>
    Instance: {{ .Labels.instance }} <br>
    Details: {{ .Annotations.description }} <br>
    Fired at: {{ (.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05" }} <br>
    {{ end }}{{ end -}}
    {{- if gt (len .Alerts.Resolved) 0 -}}
    {{ range .Alerts.Resolved }}
    Alert source: Prometheus_Alertmanager <br>
    Severity: {{ .Labels.severity }} <br>
    Status: {{ .Status }} <br>
    Alert name: {{ .Labels.alertname }} <br>
    Instance: {{ .Labels.instance }} <br>
    Details: {{ .Annotations.description }} <br>
    Fired at: {{ (.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05" }} <br>
    Resolved at: {{ (.EndsAt.Add 28800e9).Format "2006-01-02 15:04:05" }} <br>
    {{ end }}{{ end -}}
    {{- end }}

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-alertmanager-configmap.yaml

configmap/alertmanager-config created
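The constant `28800e9` in the template is a duration in nanoseconds, the unit Go's `time.Time.Add` expects. Alertmanager reports timestamps in UTC, so adding this offset shifts the displayed time to UTC+8. A quick arithmetic check of that reading (the variable names are just for illustration):

```shell
# 28800e9 written out in full, in nanoseconds.
offset_ns=28800000000000

# nanoseconds -> seconds -> hours
offset_hours=$(( offset_ns / 1000000000 / 3600 ))

echo "UTC+${offset_hours}"
```

For a different time zone, replace the constant with the corresponding offset in nanoseconds, for example `3600e9` for UTC+1.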

2. Create the Prometheus alerting rules

[root@k8s-master kube-monitor]# rm -rf kube-monitor-prometheus-configmap.yaml

[root@k8s-master kube-monitor]# vim kube-monitor-prometheus-alertmanager-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: prometheus
  name: prometheus-config
  namespace: kube-monitor
data:
  prometheus.yml: |
    rule_files:
    - /etc/prometheus/rules.yml
    alerting:
      alertmanagers:
      - static_configs:
        - targets: ["alertmanager:9093"]
    global:
      scrape_interval: 15s
      scrape_timeout: 10s
      evaluation_interval: 1m
    scrape_configs:
    - job_name: 'kubernetes-node'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'kubernetes-node-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-apiserver'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
    - job_name: 'kubernetes-services'
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__address__]
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name
    - job_name: 'kubernetes-ingresses'
      kubernetes_sd_configs:
      - role: ingress
      relabel_configs:
      - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
        regex: (.+);(.+);(.+)
        replacement: ${1}://${2}${3}
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_ingress_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_ingress_name]
        target_label: kubernetes_name
    - job_name: kubernetes-pods
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_scrape
      - action: replace
        regex: (.+)
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_path
        target_label: __metrics_path__
      - action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        source_labels:
        - __address__
        - __meta_kubernetes_pod_annotation_prometheus_io_port
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: kubernetes_pod_name
    - job_name: 'kubernetes-schedule'
      scrape_interval: 5s
      static_configs:
      - targets: ['10.10.50.24:10259']
    - job_name: 'kubernetes-controller-manager'
      scrape_interval: 5s
      static_configs:
      - targets: ['10.10.50.24:10257']
    - job_name: 'kubernetes-kube-proxy'
      scrape_interval: 5s
      static_configs:
      - targets: ['10.10.50.24:10249']
    - job_name: 'kubernetes-etcd'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/k8s-certs/etcd/ca.crt
        cert_file: /var/run/secrets/kubernetes.io/k8s-certs/etcd/server.crt
        key_file: /var/run/secrets/kubernetes.io/k8s-certs/etcd/server.key
      scrape_interval: 5s
      static_configs:
      - targets: ['10.10.50.24:2379']

rules.yml: |

groups:

- name: example

rules:

- alert: kube-proxy的cpu使用率大于80%

expr: rate(process_cpu_seconds_total{job=~"kubernetes-kube-proxy"}[1m]) * 100 > 80

for: 2s

labels:

severity: warnning

annotations:

description: "{{$labels.instance}}的{{$labels.job}}组件的cpu使用率超过80%"

- alert: kube-proxy的cpu使用率大于90%

expr: rate(process_cpu_seconds_total{job=~"kubernetes-kube-proxy"}[1m]) * 100 > 90

for: 2s

labels:

severity: critical

annotations:

description: "{{$labels.instance}}的{{$labels.job}}组件的cpu使用率超过90%"

- alert: scheduler的cpu使用率大于80%

expr: rate(process_cpu_seconds_total{job=~"kubernetes-schedule"}[1m]) * 100 > 80

for: 2s

labels:

severity: warnning

annotations:

description: "{{$labels.instance}}的{{$labels.job}}组件的cpu使用率超过80%"

- alert: scheduler的cpu使用率大于90%

expr: rate(process_cpu_seconds_total{job=~"kubernetes-schedule"}[1m]) * 100 > 90

for: 2s

labels:

severity: critical

annotations:

description: "{{$labels.instance}}的{{$labels.job}}组件的cpu使用率超过90%"

- alert: controller-manager的cpu使用率大于80%

expr: rate(process_cpu_seconds_total{job=~"kubernetes-controller-manager"}[1m]) * 100 > 80

for: 2s

labels:

severity: warnning

annotations:

description: "{{$labels.instance}}的{{$labels.job}}组件的cpu使用率超过80%"

- alert: controller-manager的cpu使用率大于90%

expr: rate(process_cpu_seconds_total{job=~"kubernetes-controller-manager"}[1m]) * 100 > 0

for: 2s

labels:

severity: critical

annotations:

description: "{{$labels.instance}}的{{$labels.job}}组件的cpu使用率超过90%"

- alert: apiserver的cpu使用率大于80%

expr: rate(process_cpu_seconds_total{job=~"kubernetes-apiserver"}[1m]) * 100 > 80

for: 2s

labels:

severity: warnning

annotations:

description: "{{$labels.instance}}的{{$labels.job}}组件的cpu使用率超过80%"

- alert: apiserver的cpu使用率大于90%

expr: rate(process_cpu_seconds_total{job=~"kubernetes-apiserver"}[1m]) * 100 > 90

for: 2s

labels:

severity: critical

annotations:

description: "{{$labels.instance}}的{{$labels.job}}组件的cpu使用率超过90%"

- alert: etcd的cpu使用率大于80%

expr: rate(process_cpu_seconds_total{job=~"kubernetes-etcd"}[1m]) * 100 > 80

for: 2s

labels:

severity: warnning

annotations:

description: "{{$labels.instance}}的{{$labels.job}}组件的cpu使用率超过80%"

- alert: etcd的cpu使用率大于90%

expr: rate(process_cpu_seconds_total{job=~"kubernetes-etcd"}[1m]) * 100 > 90

for: 2s

labels:

severity: critical

annotations:

description: "{{$labels.instance}}的{{$labels.job}}组件的cpu使用率超过90%"

- alert: kube-state-metrics的cpu使用率大于80%

expr: rate(process_cpu_seconds_total{k8s_app=~"kube-state-metrics"}[1m]) * 100 > 80

for: 2s

labels:

severity: warnning

annotations:

description: "{{$labels.instance}}的{{$labels.k8s_app}}组件的cpu使用率超过80%"

value: "{{ $value }}%"

threshold: "80%"

- alert: kube-state-metrics的cpu使用率大于90%

expr: rate(process_cpu_seconds_total{k8s_app=~"kube-state-metrics"}[1m]) * 100 > 0

for: 2s

labels:

severity: critical

annotations:

description: "{{$labels.instance}}的{{$labels.k8s_app}}组件的cpu使用率超过90%"

value: "{{ $value }}%"

threshold: "90%"

- alert: coredns的cpu使用率大于80%

expr: rate(process_cpu_seconds_total{k8s_app=~"kube-dns"}[1m]) * 100 > 80

for: 2s

labels:

severity: warnning

annotations:

description: "{{$labels.instance}}的{{$labels.k8s_app}}组件的cpu使用率超过80%"

value: "{{ $value }}%"

threshold: "80%"

- alert: coredns的cpu使用率大于90%

expr: rate(process_cpu_seconds_total{k8s_app=~"kube-dns"}[1m]) * 100 > 90

for: 2s

labels:

severity: critical

annotations:

description: "{{$labels.instance}}的{{$labels.k8s_app}}组件的cpu使用率超过90%"

value: "{{ $value }}%"

threshold: "90%"

- alert: kube-proxy打开句柄数>600

expr: process_open_fds{job=~"kubernetes-kube-proxy"} > 600

for: 2s

labels:

severity: warnning

annotations:

description: "{{$labels.instance}}的{{$labels.job}}打开句柄数>600"

value: "{{ $value }}"

- alert: kube-proxy打开句柄数>1000

expr: process_open_fds{job=~"kubernetes-kube-proxy"} > 1000

for: 2s

labels:

severity: critical

annotations:

description: "{{$labels.instance}}的{{$labels.job}}打开句柄数>1000"

value: "{{ $value }}"

- alert: kubernetes-schedule打开句柄数>600

expr: process_open_fds{job=~"kubernetes-schedule"} > 600

for: 2s

labels:

severity: warnning

annotations:

description: "{{$labels.instance}}的{{$labels.job}}打开句柄数>600"

value: "{{ $value }}"

- alert: kubernetes-schedule打开句柄数>1000

expr: process_open_fds{job=~"kubernetes-schedule"} > 1000

for: 2s

labels:

severity: critical

annotations:

description: "{{$labels.instance}}的{{$labels.job}}打开句柄数>1000"

value: "{{ $value }}"

- alert: kubernetes-controller-manager打开句柄数>600

expr: process_open_fds{job=~"kubernetes-controller-manager"} > 600

for: 2s

labels:

severity: warnning

annotations:

description: "{{$labels.instance}}的{{$labels.job}}打开句柄数>600"

value: "{{ $value }}"

- alert: kubernetes-controller-manager打开句柄数>1000

expr: process_open_fds{job=~"kubernetes-controller-manager"} > 1000

for: 2s

labels:

severity: critical

annotations:

description: "{{$labels.job}} on {{$labels.instance}} has more than 1000 open file descriptors"

value: "{{ $value }}"

- alert: kubernetes-apiserver open file descriptors > 600

expr: process_open_fds{job=~"kubernetes-apiserver"} > 600

for: 2s

labels:

severity: warning

annotations:

description: "{{$labels.job}} on {{$labels.instance}} has more than 600 open file descriptors"

value: "{{ $value }}"

- alert: kubernetes-apiserver open file descriptors > 1000

expr: process_open_fds{job=~"kubernetes-apiserver"} > 1000

for: 2s

labels:

severity: critical

annotations:

description: "{{$labels.job}} on {{$labels.instance}} has more than 1000 open file descriptors"

value: "{{ $value }}"

- alert: kubernetes-etcd open file descriptors > 600

expr: process_open_fds{job=~"kubernetes-etcd"} > 600

for: 2s

labels:

severity: warning

annotations:

description: "{{$labels.job}} on {{$labels.instance}} has more than 600 open file descriptors"

value: "{{ $value }}"

- alert: kubernetes-etcd open file descriptors > 1000

expr: process_open_fds{job=~"kubernetes-etcd"} > 1000

for: 2s

labels:

severity: critical

annotations:

description: "{{$labels.job}} on {{$labels.instance}} has more than 1000 open file descriptors"

value: "{{ $value }}"

- alert: coredns

expr: process_open_fds{k8s_app=~"kube-dns"} > 600

for: 2s

labels:

severity: warning

annotations:

description: "Add-on {{$labels.k8s_app}} ({{$labels.instance}}): open file descriptors exceed 600"

value: "{{ $value }}"

- alert: coredns

expr: process_open_fds{k8s_app=~"kube-dns"} > 1000

for: 2s

labels:

severity: critical

annotations:

description: "Add-on {{$labels.k8s_app}} ({{$labels.instance}}): open file descriptors exceed 1000"

value: "{{ $value }}"

- alert: kube-proxy

expr: process_virtual_memory_bytes{job=~"kubernetes-kube-proxy"} > 2000000000

for: 2s

labels:

severity: warning

annotations:

description: "Component {{$labels.job}} ({{$labels.instance}}): virtual memory usage exceeds 2G"

value: "{{ $value }}"

- alert: scheduler

expr: process_virtual_memory_bytes{job=~"kubernetes-schedule"} > 2000000000

for: 2s

labels:

severity: warning

annotations:

description: "Component {{$labels.job}} ({{$labels.instance}}): virtual memory usage exceeds 2G"

value: "{{ $value }}"

- alert: kubernetes-controller-manager

expr: process_virtual_memory_bytes{job=~"kubernetes-controller-manager"} > 2000000000

for: 2s

labels:

severity: warning

annotations:

description: "Component {{$labels.job}} ({{$labels.instance}}): virtual memory usage exceeds 2G"

value: "{{ $value }}"

- alert: kubernetes-apiserver

expr: process_virtual_memory_bytes{job=~"kubernetes-apiserver"} > 2000000000

for: 2s

labels:

severity: warning

annotations:

description: "Component {{$labels.job}} ({{$labels.instance}}): virtual memory usage exceeds 2G"

value: "{{ $value }}"

- alert: kubernetes-etcd

expr: process_virtual_memory_bytes{job=~"kubernetes-etcd"} > 2000000000

for: 2s

labels:

severity: warning

annotations:

description: "Component {{$labels.job}} ({{$labels.instance}}): virtual memory usage exceeds 2G"

value: "{{ $value }}"

- alert: kube-dns

expr: process_virtual_memory_bytes{k8s_app=~"kube-dns"} > 2000000000

for: 2s

labels:

severity: warning

annotations:

description: "Add-on {{$labels.k8s_app}} ({{$labels.instance}}): virtual memory usage exceeds 2G"

value: "{{ $value }}"

- alert: HttpRequestsAvg

expr: sum(rate(rest_client_requests_total{job=~"kubernetes-kube-proxy|kubernetes-kubelet|kubernetes-schedule|kubernetes-controller-manager|kubernetes-apiserver"}[1m])) > 1000

for: 2s

labels:

team: admin

annotations:

description: "Component {{$labels.job}} ({{$labels.instance}}): TPS exceeds 1000"

value: "{{ $value }}"

threshold: "1000"

- alert: Pod_restarts

expr: kube_pod_container_status_restarts_total{namespace=~"kube-system|default|monitor"} > 0

for: 2s

labels:

severity: warning

annotations:

description: "Container {{$labels.container}} of pod {{$labels.pod}} in namespace {{$labels.namespace}} was restarted; this metric was collected by {{$labels.instance}}"

value: "{{ $value }}"

threshold: "0"

- alert: Pod_waiting

expr: kube_pod_container_status_waiting_reason{namespace=~"kube-system|default"} == 1

for: 2s

labels:

team: admin

annotations:

description: "Namespace {{$labels.namespace}} ({{$labels.instance}}): container {{$labels.container}} of pod {{$labels.pod}} failed to start and is waiting"

value: "{{ $value }}"

threshold: "1"

- alert: Pod_terminated

expr: kube_pod_container_status_terminated_reason{namespace=~"kube-system|default|monitor"} == 1

for: 2s

labels:

team: admin

annotations:

description: "Namespace {{$labels.namespace}} ({{$labels.instance}}): container {{$labels.container}} of pod {{$labels.pod}} was terminated"

value: "{{ $value }}"

threshold: "1"

- alert: Etcd_leader

expr: etcd_server_has_leader{job="kubernetes-etcd"} == 0

for: 2s

labels:

team: admin

annotations:

description: "Component {{$labels.job}} ({{$labels.instance}}): there is currently no leader"

value: "{{ $value }}"

threshold: "0"

- alert: Etcd_leader_changes

expr: rate(etcd_server_leader_changes_seen_total{job="kubernetes-etcd"}[1m]) > 0

for: 2s

labels:

team: admin

annotations:

description: "Component {{$labels.job}} ({{$labels.instance}}): the leader has changed"

value: "{{ $value }}"

threshold: "0"

- alert: Etcd_failed

expr: rate(etcd_server_proposals_failed_total{job="kubernetes-etcd"}[1m]) > 0

for: 2s

labels:

team: admin

annotations:

description: "Component {{$labels.job}} ({{$labels.instance}}): proposals are failing"

value: "{{ $value }}"

threshold: "0"

- alert: Etcd_db_total_size

expr: etcd_debugging_mvcc_db_total_size_in_bytes{job="kubernetes-etcd"} > 10000000000

for: 2s

labels:

team: admin

annotations:

description: "Component {{$labels.job}} ({{$labels.instance}}): DB size exceeds 10G"

value: "{{ $value }}"

threshold: "10G"

- alert: Endpoint_ready

expr: kube_endpoint_address_not_ready{namespace=~"kube-system|default"} == 1

for: 2s

labels:

team: admin

annotations:

description: "Namespace {{$labels.namespace}} ({{$labels.instance}}): endpoint {{$labels.endpoint}} is not ready"

value: "{{ $value }}"

threshold: "1"

- name: Node_exporter Down

rules:

- alert: Node instance is down

expr: up{job != "kubernetes-apiserver",job != "kubernetes-controller-manager",job != "kubernetes-etcd",job != "kubernetes-kube-proxy",job != "kubernetes-schedule",job != "kubernetes-service-endpoints"} == 0

for: 15s

labels:

severity: Emergency

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "The Node_exporter client has been down for more than 15s"

- name: Memory Usage High

rules:

- alert: Physical node - memory usage too high

expr: 100 - (node_memory_Buffers_bytes + node_memory_Cached_bytes + node_memory_MemFree_bytes) / node_memory_MemTotal_bytes * 100 > 90

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "Memory usage > 90%; current memory usage: {{ $value }}"

- name: Cpu Load 1

rules:

- alert: Physical node - CPU (1-minute load)

expr: node_load1 > 9

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "CPU load (1 minute) > 9; current CPU load (1 minute): {{ $value }}"

- name: Cpu Load 5

rules:

- alert: Physical node - CPU (5-minute load)

expr: node_load5 > 10

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "CPU load (5 minutes) > 10; current CPU load (5 minutes): {{ $value }}"

- name: Cpu Load 15

rules:

- alert: Physical node - CPU (15-minute load)

expr: node_load15 > 11

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "CPU load (15 minutes) > 11; current CPU load (15 minutes): {{ $value }}"

- name: Cpu Idle High

rules:

- alert: Physical node - CPU usage too high

expr: 100 - (avg by(job,instance) (irate(node_cpu_seconds_total{mode="idle"}[5m])) * 100) > 90

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "CPU usage > 90%; current CPU usage: {{ $value }}"

- name: Root Disk Space

rules:

- alert: Physical node - root partition usage

expr: (node_filesystem_size_bytes {mountpoint ="/"} - node_filesystem_free_bytes {mountpoint ="/"}) / node_filesystem_size_bytes {mountpoint ="/"} * 100 > 90

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "Root partition usage > 90%; current root partition usage: {{ $value }}"

- name: opt Disk Space

rules:

- alert: Physical node - /opt partition usage

expr: (node_filesystem_size_bytes {mountpoint ="/opt"} - node_filesystem_free_bytes {mountpoint ="/opt"}) / node_filesystem_size_bytes {mountpoint ="/opt"} * 100 > 90

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "/opt partition usage > 90%; current /opt partition usage: {{ $value }}"

- name: SWAP Usage Space

rules:

- alert: Physical node - swap usage

expr: (1 - (node_memory_SwapFree_bytes / node_memory_SwapTotal_bytes)) * 100 > 90

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "Swap usage > 90%; current swap usage: {{ $value }}"

- name: Unusual Disk Read Rate

rules:

- alert: Physical node - disk I/O read rate

expr: sum by (job,instance) (irate(node_disk_read_bytes_total[2m])) / 1024 / 1024 > 200

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "Disk I/O read rate > 200 MB/s; current disk I/O read rate: {{ $value }}"

- name: Unusual Disk Write Rate

rules:

- alert: Physical node - disk I/O write rate

expr: sum by (job,instance) (irate(node_disk_written_bytes_total[2m])) / 1024 / 1024 > 200

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "Disk I/O write rate > 200 MB/s; current disk I/O write rate: {{ $value }}"

- name: UnusualNetworkThroughputOut

rules:

- alert: Physical node - outbound network bandwidth

expr: sum by (job,instance) (irate(node_network_transmit_bytes_total[2m])) / 1024 / 1024 > 200

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "Outbound network bandwidth > 200 MB/s; current outbound network bandwidth: {{ $value }}"

- name: TCP_Established_High

rules:

- alert: Physical node - TCP connections

expr: node_netstat_Tcp_CurrEstab > 5000

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "TCP connections > 5000; current TCP connections: [{{ $value }}]"

- name: TCP_TIME_WAIT

rules:

- alert: Physical node - TCP connections in TIME_WAIT

expr: node_sockstat_TCP_tw > 5000

for: 3m

labels:

severity: Warning

annotations:

summary: "{{ $labels.job }}"

address: "{{ $labels.instance }}"

description: "TCP connections in TIME_WAIT > 5000; current TCP connections in TIME_WAIT: [{{ $value }}]"

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-prometheus-alertmanager-configmap.yaml

configmap/prometheus-config configured
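For reference, here is a minimal sketch of how one of the rules above nests inside a Prometheus rules file. It assumes the standard rule-file layout (`groups`, then `rules`); the group name `k8s-components` is a placeholder for illustration and is not taken from this article.

```yaml
groups:
- name: k8s-components            # hypothetical group name, for illustration only
  rules:
  - alert: kube-proxy open file descriptors > 1000
    expr: process_open_fds{job=~"kubernetes-kube-proxy"} > 1000
    for: 2s                       # condition must hold this long before the alert fires
    labels:
      severity: critical
    annotations:
      description: "{{$labels.job}} on {{$labels.instance}} has more than 1000 open file descriptors"
      value: "{{ $value }}"
```

If the rule evaluation interval is longer than 2s, a `for: 2s` rule effectively fires on the second consecutive evaluation in which the condition holds.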

3. Create the PV for Alertmanager

[root@k8s-master kube-monitor]# vim kube-monitor-alertmanager-pv.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: alertmanager-pv

labels:

name: alertmanager-pv

spec:

capacity:

storage: 10Gi

accessModes:

- ReadWriteMany

persistentVolumeReclaimPolicy: Retain

storageClassName: nfs-monitor

nfs:

path: /data/alertmanager

server: 10.10.50.24

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-alertmanager-pv.yaml

persistentvolume/alertmanager-pv created

4. Create the PVC for Alertmanager

[root@k8s-master kube-monitor]# vim kube-monitor-alertmanager-pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: alertmanager-pvc

namespace: kube-monitor

spec:

accessModes:

- ReadWriteMany

storageClassName: nfs-monitor

resources:

requests:

storage: 10Gi

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-alertmanager-pvc.yaml

persistentvolumeclaim/alertmanager-pvc created
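Written out with their YAML nesting, the two manifests above would look roughly like this. All values come from the listings; only the indentation and the combined multi-document form (`---`) are added here.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: alertmanager-pv
  labels:
    name: alertmanager-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs-monitor
  nfs:
    path: /data/alertmanager
    server: 10.10.50.24
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: alertmanager-pvc
  namespace: kube-monitor
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: nfs-monitor
  resources:
    requests:
      storage: 10Gi
```

The claim can bind to the volume because the `storageClassName` and access mode match and the volume's capacity covers the requested size.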

5. Create the Alertmanager Deployment YAML file with the following content

[root@k8s-master kube-monitor]# vim kube-monitor-alertmanager-deploy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: alertmanager

namespace: kube-monitor

labels:

app: alertmanager

spec:

replicas: 1

selector:

matchLabels:

app: alertmanager

component: server

template:

metadata:

labels:

app: alertmanager

component: server

spec:

serviceAccountName: monitor # service account that grants the container permission to read data

containers:

- name: alertmanager # container name

image: bitnami/alertmanager:0.24.0 # image

imagePullPolicy: IfNotPresent # image pull policy

args: # arguments passed to the container at startup

- "--config.file=/etc/alertmanager/alertmanager.yml"

- "--log.level=debug"

ports: # ports exposed by the container

- containerPort: 9093

protocol: TCP # protocol

name: alertmanager

volumeMounts: # volumes mounted into the container

- mountPath: /etc/alertmanager # where to mount the volume

name: alertmanager-config # which volume to mount (matches a volume defined below)

- mountPath: /alertmanager/

name: alertmanager-data

- name: localtime

mountPath: /etc/localtime

volumes: # volume definitions

- name: alertmanager-config # volume name

configMap: # populate the volume from a ConfigMap

name: alertmanager-config # name of the ConfigMap

- name: alertmanager-data

persistentVolumeClaim:

claimName: alertmanager-pvc

- name: localtime

hostPath:

path: /usr/share/zoneinfo/Asia/Shanghai

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-alertmanager-deploy.yaml

deployment.apps/alertmanager created

6. Create the Service with the following content

[root@k8s-master kube-monitor]# vim kube-monitor-alertmanager-svc.yaml

apiVersion: v1

kind: Service

metadata:

labels:

name: alertmanager

kubernetes.io/cluster-service: 'true'

name: alertmanager

namespace: kube-monitor

spec:

ports:

- name: alertmanager

nodePort: 30003

port: 9093

protocol: TCP

targetPort: 9093

selector:

app: alertmanager

sessionAffinity: None

type: NodePort

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-alertmanager-svc.yaml

service/alertmanager created
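For Prometheus to deliver alerts to this Service, its `prometheus.yml` needs an `alerting` section that points at Alertmanager. A minimal sketch, assuming the in-cluster DNS name `alertmanager.kube-monitor.svc:9093` (derived from the Service name, namespace, and port above); the rules file path `/etc/prometheus/rules.yml` is an assumption, not confirmed by this section.

```yaml
alerting:
  alertmanagers:
  - static_configs:
    - targets: ["alertmanager.kube-monitor.svc:9093"]   # <service>.<namespace>.svc:<port>
rule_files:
- /etc/prometheus/rules.yml   # assumed path; use the key name from your own ConfigMap
```

The NodePort (30003) is only needed for browser access from outside the cluster; Prometheus running in the cluster can use the Service port 9093 directly.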

7. Create the etcd-certs Secret for Prometheus

[root@k8s-master kube-monitor]# kubectl -n kube-monitor create secret generic etcd-certs --from-file=/etc/kubernetes/pki/etcd/server.key --from-file=/etc/kubernetes/pki/etcd/server.crt --from-file=/etc/kubernetes/pki/etcd/ca.crt

secret/etcd-certs created

8. Delete the previously installed Prometheus

[root@k8s-master kube-monitor]# kubectl delete -f kube-monitor-prometheus-deploy.yaml

deployment.apps "prometheus-server" deleted

9. Modify prometheus-deploy.yaml

[root@k8s-master kube-monitor]# vim kube-monitor-prometheus-deploy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: prometheus-server

namespace: kube-monitor

labels:

app: prometheus

spec:

replicas: 1

selector:

matchLabels:

app: prometheus

component: server

template:

metadata:

labels:

app: prometheus

component: server

annotations:

prometheus.io/scrape: 'false' # this Pod is not discovered or scraped by Prometheus; other Pods can set this annotation to 'true' to be picked up automatically through service discovery

spec:

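serviceAccountName: monitor # service account that grants the container permission to read data

containers:

- name: prometheus # container name

image: prom/prometheus:v2.27.1 # image

imagePullPolicy: IfNotPresent # image pull policy

command: # command run when the container starts

- prometheus

- --config.file=/etc/prometheus/prometheus.yml

- --storage.tsdb.path=/prometheus # TSDB data directory

- --storage.tsdb.retention.time=720h # how long to retain data (replaces the deprecated --storage.tsdb.retention flag)

- --web.enable-lifecycle # enable hot reload through the HTTP lifecycle API

ports: # ports exposed by the container

- containerPort: 9090

protocol: TCP # protocol

volumeMounts: # volumes mounted into the container

- mountPath: /etc/prometheus # where to mount the volume

name: prometheus-config # which volume to mount (matches a volume defined below)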

- mountPath: /prometheus/

name: prometheus-data

- mountPath: /var/run/secrets/kubernetes.io/k8s-certs/etcd/

name: k8s-certs

volumes: # volume definitions

- name: prometheus-config # volume name

configMap: # populate the volume from a ConfigMap

name: prometheus-config # name of the ConfigMap

- name: prometheus-data

persistentVolumeClaim:

claimName: prometheus-pvc

- name: k8s-certs

secret:

secretName: etcd-certs

[root@k8s-master kube-monitor]# kubectl apply -f kube-monitor-prometheus-deploy.yaml

deployment.apps/prometheus-server created
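With the `etcd-certs` Secret mounted, an etcd scrape job can authenticate with the client certificate. The following is only a sketch: the job name `kubernetes-etcd` matches the alert rules earlier, the file names follow from the `--from-file` arguments in step 7, and the target `10.10.50.24:2379` assumes etcd listens on the master node's address at the default client port.

```yaml
scrape_configs:
- job_name: kubernetes-etcd
  scheme: https
  tls_config:
    # paths follow the volumeMount of the etcd-certs Secret in the Deployment above
    ca_file: /var/run/secrets/kubernetes.io/k8s-certs/etcd/ca.crt
    cert_file: /var/run/secrets/kubernetes.io/k8s-certs/etcd/server.crt
    key_file: /var/run/secrets/kubernetes.io/k8s-certs/etcd/server.key
  static_configs:
  - targets: ["10.10.50.24:2379"]   # assumed etcd endpoint; adjust to your cluster
```

If a job with this name already exists in your `prometheus.yml`, compare it against this fragment rather than adding a second one.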

10. Check that Prometheus was deployed successfully

[root@k8s-master kube-monitor]# kubectl get pod -n kube-monitor

NAME READY STATUS RESTARTS AGE

alertmanager-b8bf48445-qgndp 1/1 Running 0 13m

grafana-server-847d9d6794-h6ddd 1/1 Running 0 14h

kube-state-metrics-7594ddfc96-js97z 1/1 Running 0 42m

node-exporter-h2hzl 1/1 Running 0 14h

prometheus-server-79659467f6-rzk6t 1/1 Running 0 3m22s

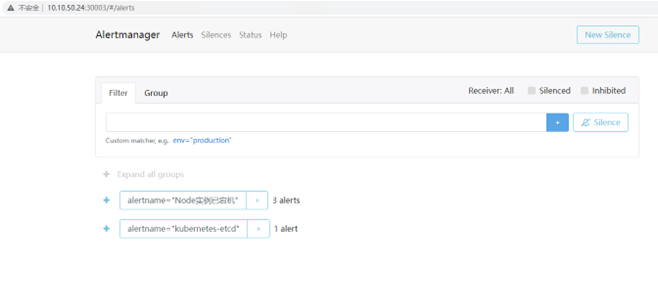

11. Access Alertmanager from a browser

# Open http://10.10.50.24:30003 in a browser, as shown below

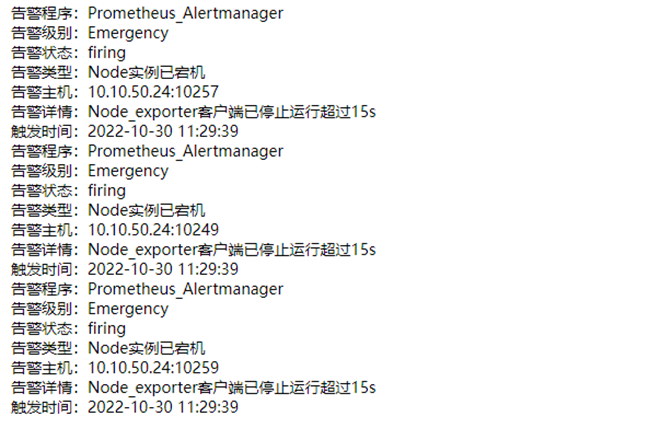

12. Check the email alerts

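13. Troubleshooting

Note: run the following steps with caution in a production environment; they can cause cluster failures.

In a cluster deployed with kubeadm, kube-controller-manager and kube-scheduler listen on 127.0.0.1 by default. To make them reachable from outside, change the address to 0.0.0.0 in the corresponding manifest files, as follows: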

# kube-scheduler

[root@k8s-master kube-monitor]# sed -i 's/127.0.0.1/0.0.0.0/g' /etc/kubernetes/manifests/kube-scheduler.yaml

This changes the following:

--bind-address=127.0.0.1 becomes --bind-address=0.0.0.0

httpGet:

host: 127.0.0.1 becomes host: 0.0.0.0

# kube-controller-manager

[root@k8s-master kube-monitor]# sed -i 's/127.0.0.1/0.0.0.0/g' /etc/kubernetes/manifests/kube-controller-manager.yaml

This changes the following:

--bind-address=127.0.0.1 becomes --bind-address=0.0.0.0

httpGet:

host: 127.0.0.1 becomes host: 0.0.0.0

# Restart kubelet on each node

[root@k8s-master kube-monitor]# systemctl restart kubelet
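After the change, the relevant parts of `/etc/kubernetes/manifests/kube-scheduler.yaml` should look roughly like the fragment below. This is a sketch of a kubeadm-generated manifest with unrelated fields omitted; the probe path and port shown are the usual kube-scheduler defaults and may differ in your cluster.

```yaml
spec:
  containers:
  - name: kube-scheduler
    command:
    - kube-scheduler
    - --bind-address=0.0.0.0      # was 127.0.0.1
    livenessProbe:
      httpGet:
        host: 0.0.0.0             # was 127.0.0.1 (rewritten by the same sed command)
        path: /healthz
        port: 10259               # assumed default secure port
        scheme: HTTPS
```

The kube-controller-manager manifest follows the same pattern with its own port. Because the `sed` command replaces every occurrence of 127.0.0.1 in the file, it is worth reviewing the result before relying on it.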

# kube-proxy

[root@k8s-master kube-monitor]# kubectl edit configmap kube-proxy -n kube-system

Change the following:

metricsBindAddress: "" becomes metricsBindAddress: "0.0.0.0"
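Inside the ConfigMap, this setting lives in the `config.conf` key, which holds a `KubeProxyConfiguration` document. A trimmed sketch with all other fields omitted:

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
metricsBindAddress: "0.0.0.0"   # was ""; exposes the metrics endpoint on all interfaces
```

kube-proxy reads this configuration only at startup, so a running Pod does not pick up the change until it is recreated.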

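# Delete the kube-proxy Pod so that it is recreated with the new configuration (Pod listings before and after the deletion)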

[root@k8s-master kube-monitor]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-59697b644f-cvdfx 1/1 Running 0 15h

calico-node-mzprw 1/1 Running 0 15h

coredns-c676cc86f-l4z74 1/1 Running 0 15h

coredns-c676cc86f-m9zq5 1/1 Running 0 15h

etcd-k8s-master 1/1 Running 0 15h

kube-apiserver-k8s-master 1/1 Running 0 15h

kube-controller-manager-k8s-master 1/1 Running 0 2m50s

kube-proxy-w4dsl 1/1 Running 0 15h

kube-scheduler-k8s-master 1/1 Running 0 2m58s

[root@k8s-master kube-monitor]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-59697b644f-cvdfx 1/1 Running 0 15h

calico-node-mzprw 1/1 Running 0 15h

coredns-c676cc86f-l4z74 1/1 Running 0 15h

coredns-c676cc86f-m9zq5 1/1 Running 0 15h

etcd-k8s-master 1/1 Running 0 15h

kube-apiserver-k8s-master 1/1 Running 0 15h

kube-controller-manager-k8s-master 1/1 Running 0 3m44s

kube-proxy-zdlh5 1/1 Running 0 32s

kube-scheduler-k8s-master 1/1 Running 0 3m52s


Prometheus is not getting any monitoring data:

# kubectl logs -f pod/prometheus-server-b6fb95b8d-nnf56 -n kube-monitor

level=error ts=2023-05-16T16:08:27.079Z caller=klog.go:116 component=k8s_client_runtime func=ErrorDepth msg=\"pkg/mod/k8s.io/client-go@v0.21.0/tools/cache/reflector.go:167: Failed to watch *v1.Node: failed to list *v1.Node: nodes is forbidden: User \"system:serviceaccount:kube-monitor:monitor\" cannot list resource \"nodes\" in API group \"\" at the cluster scope\"

level=error ts=2023-05-16T16:08:31.737Z caller=klog.go:116 component=k8s_client_runtime func=ErrorDepth msg=\"pkg/mod/k8s.io/client-go@v0.21.0/tools/cache/reflector.go:167: Failed to watch *v1.Service: failed to list *v1.Service: services is forbidden: User \"system:serviceaccount:kube-monitor:monitor\" cannot list resource \"services\" in API group \"\" at the cluster scope\"

level=error ts=2023-05-16T16:08:38.315Z caller=klog.go:116 component=k8s_client_runtime func=ErrorDepth msg=\"pkg/mod/k8s.io/client-go@v0.21.0/tools/cache/reflector.go:167: Failed to watch *v1.Pod: failed to list *v1.Pod: pods is forbidden: User \"system:serviceaccount:kube-monitor:monitor\" cannot list resource \"pods\" in API group \"\" at the cluster scope\"